Building upon earlier work with semantic search, OpenSource Connections is excited to unveil new possibilities with Solr-based product recommendation. With this technology, it is now possible to serve user-specific, search-aware product recommendations directly from Solr.

In this post, we will review a simple example of search-aware recommendation, using an online grocery service as an example of e-commerce product recommendation. For this example, I have built a basic keyword search over the product catalog. We've also added two fields to Solr: purchasedByTheseUsers and recommendToTheseUsers. Both fields contain lists of userIds. Recall that each document in the index corresponds to a product, so the purchasedByTheseUsers field literally lists all of the users who have purchased that product. The next field, recommendToTheseUsers, is the special sauce: it lists all users who might want to purchase the corresponding product. We have extracted this field using a process called collaborative filtering, which is described in my previous post, Semantic Search With Solr And Python Numpy. With collaborative filtering, we make product recommendations by mathematically identifying similar users (based on the products they have purchased) and then recommending items that those similar users have purchased.
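The previous post built the recommendToTheseUsers data with Python and NumPy. The sketch below uses a much simpler stand-in: user-based nearest-neighbor collaborative filtering in plain Python. It shows how purchasedByTheseUsers lists can become recommendToTheseUsers lists. Several pieces are assumptions for illustration only: the toy data, the function names, cosine similarity over purchase sets, and the neighbor count `k`. This is not the method that produced the results below.

```python
from collections import defaultdict
from math import sqrt

# Hypothetical toy catalog: product id -> set of user ids, i.e. what the
# purchasedByTheseUsers field holds for each product document.
PURCHASED_BY = {
    "russet-potatoes": {"wendy", "alice"},
    "red-potatoes": {"alice"},
    "ribeye-steak": {"dave", "bob"},
    "charcoal": {"bob"},
    "banana-chips": {"dave"},
}


def user_baskets(purchased_by):
    """Invert product -> users into user -> set of purchased products."""
    baskets = defaultdict(set)
    for product, users in purchased_by.items():
        for user in users:
            baskets[user].add(product)
    return baskets


def cosine(a, b):
    """Cosine similarity of two binary purchase vectors represented as sets."""
    if not a or not b:
        return 0.0
    return len(a & b) / sqrt(len(a) * len(b))


def recommend_to_these_users(purchased_by, k=2):
    """Return product -> sorted list of users who might want that product.

    For each user, take the k most similar users (skipping users with no
    purchases in common) and recommend the products those neighbors bought
    that the user has not bought yet.
    """
    baskets = user_baskets(purchased_by)
    recs = defaultdict(set)
    for user, basket in baskets.items():
        scored = [
            (cosine(basket, other_basket), other)
            for other, other_basket in baskets.items()
            if other != user
        ]
        # Highest similarity first; break ties by user id so output is stable.
        scored.sort(key=lambda pair: (-pair[0], pair[1]))
        for score, other in scored[:k]:
            if score == 0.0:
                break  # remaining neighbors share no purchases with this user
            for product in baskets[other] - basket:
                recs[product].add(user)
    return {product: sorted(users) for product, users in recs.items()}


print(recommend_to_these_users(PURCHASED_BY))
```

In this toy data, Wendy shares a potato purchase with Alice, so Alice's red potatoes get recommended to Wendy. Dave and Bob share a steak purchase, so each is recommended what the other bought.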

Now that the background has been established, let's look at the results. Here we search for three different products on behalf of two different, randomly selected users, whom we will refer to as Wendy and Dave. For each product, we first perform a raw search to get a baseline for how the search performs against user queries. We then search for the intersection of these search results and the products recommended to Wendy. Finally, we search for the intersection of these search results and the products recommended to Dave.
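Each search below is an ordinary Solr request. The personalized searches add a single filter query (fq) on recommendToTheseUsers. The sketch below makes that pattern explicit. The helper name is hypothetical, and the base URL is copied from the examples in this post. A stock Solr install would more typically serve requests from a path like /solr/&lt;core&gt;/select.

```python
from urllib.parse import urlencode

# Base URL as it appears in this post's examples (assumption: adjust to your
# own Solr core/handler, e.g. something like /solr/<core>/select).
SOLR_URL = "http://localhost:8983/recommendation"


def search_url(query, user_id=None, base=SOLR_URL):
    """Build a raw search URL, or a personalized one when user_id is given.

    The personalized variant adds a filter query (fq) on the
    recommendToTheseUsers field, so Solr returns only the keyword matches
    that were also recommended to that user.
    """
    params = [("q", query)]
    if user_id is not None:
        params.append(("fq", f"recommendToTheseUsers:{user_id}"))
    # Leave ':' unescaped so the URL reads like the examples in this post.
    return f"{base}?{urlencode(params, safe=':')}"


WENDY = "46f7a9a9-c661-4497-81a9-64ed537b65b0"
DAVE = "973990af-1482-4b63-a83c-bd2d8fc1ff32"

# The nine searches shown below: raw, Wendy's, and Dave's for each product.
for product in ["potatoes", "steak", "banana"]:
    for user_id in [None, WENDY, DAVE]:
        print(search_url(product, user_id))
```

Solr filter queries restrict the result set without contributing to relevance scores. The personalized searches therefore keep the raw search's keyword ranking and simply drop every product not recommended to that user.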

Here are the results:

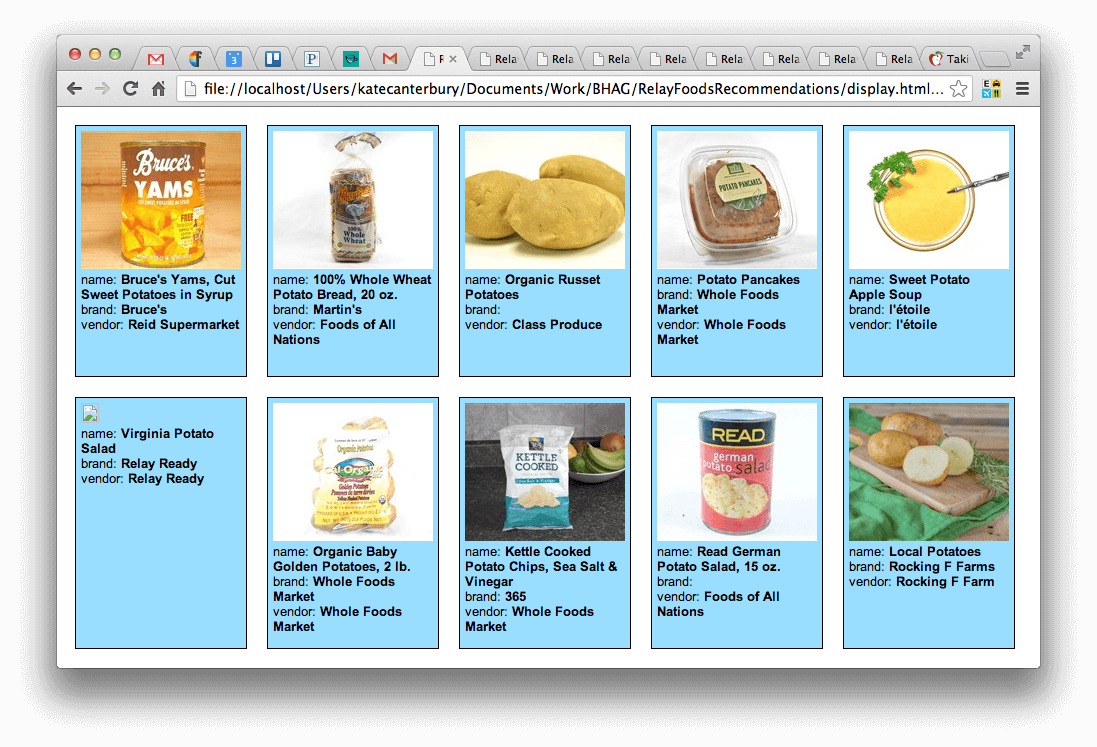

Potatoes

SOLR_URL: http://localhost:8983/recommendation?q=potatoes

Canned yams, potato bread, and other potato-derived products. These are not great results, because when people search for potatoes… they usually just want potatoes.

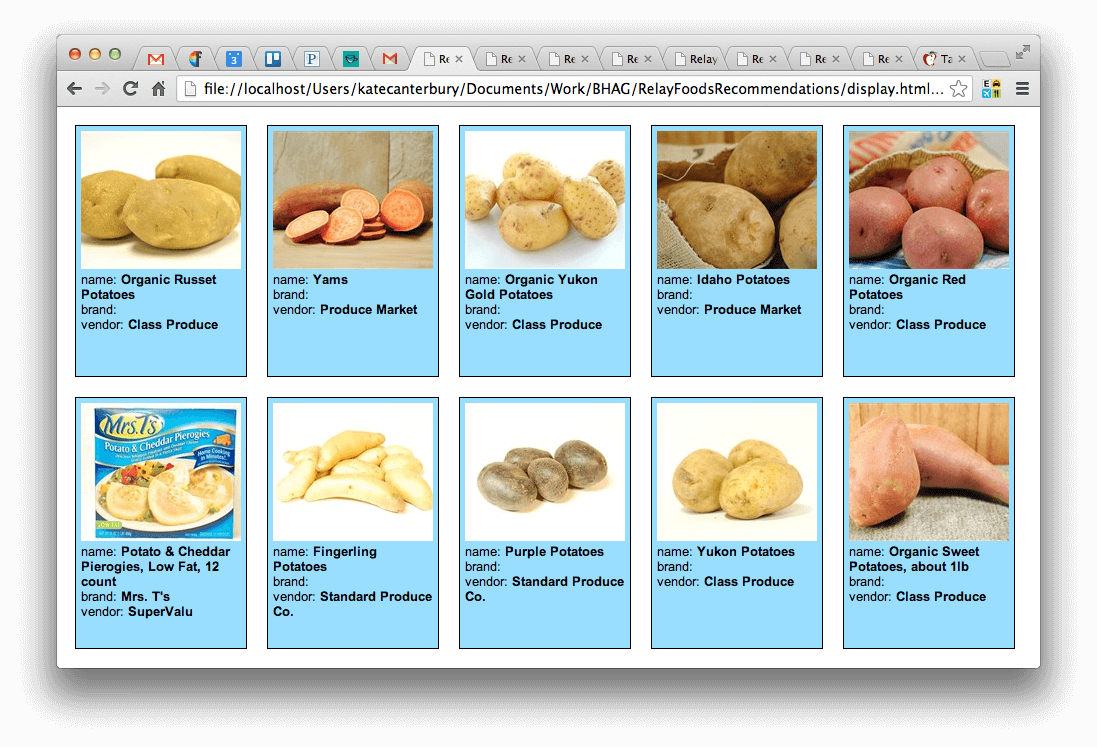

Wendy's Potatoes

SOLR_URL: http://localhost:8983/recommendation?q=potatoes&fq=recommendToTheseUsers:46f7a9a9-c661-4497-81a9-64ed537b65b0

Much better; with the exception of one item, everything pictured here is a potato.

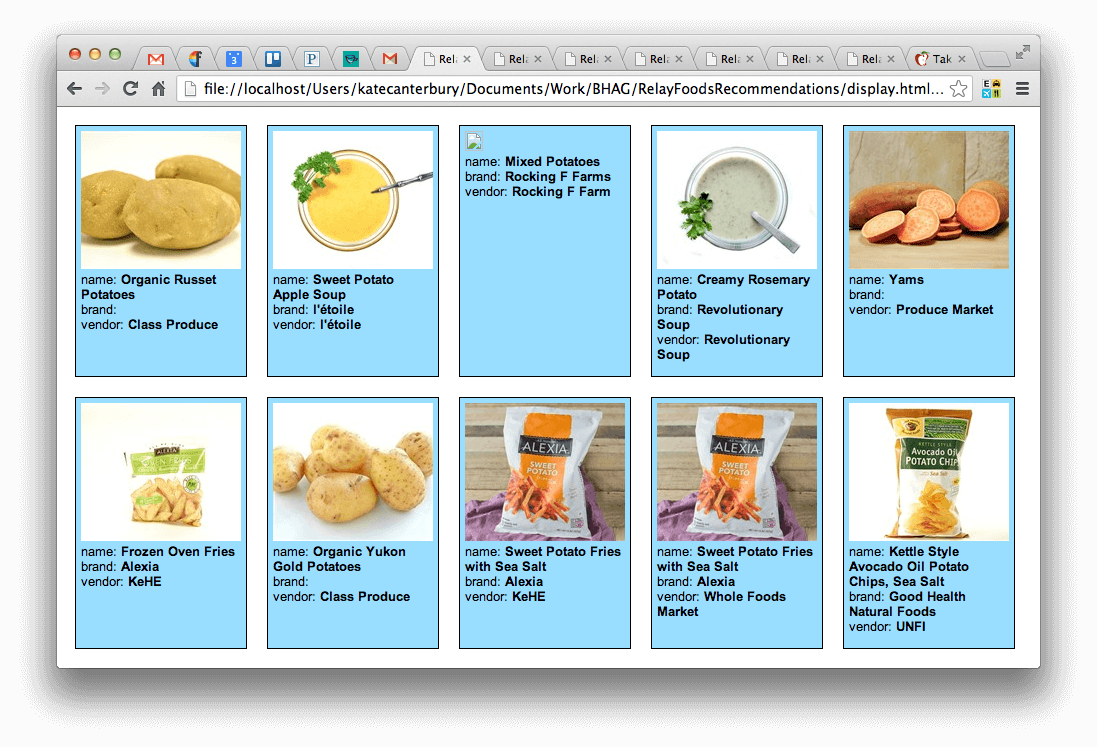

Dave's Potatoes

SOLR_URL: http://localhost:8983/recommendation?q=potatoes&fq=recommendToTheseUsers:973990af-1482-4b63-a83c-bd2d8fc1ff32

Dave's apparently a convenience shopper: besides actual potatoes, we have soups, frozen french fries, and chips. Perfectly reasonable!

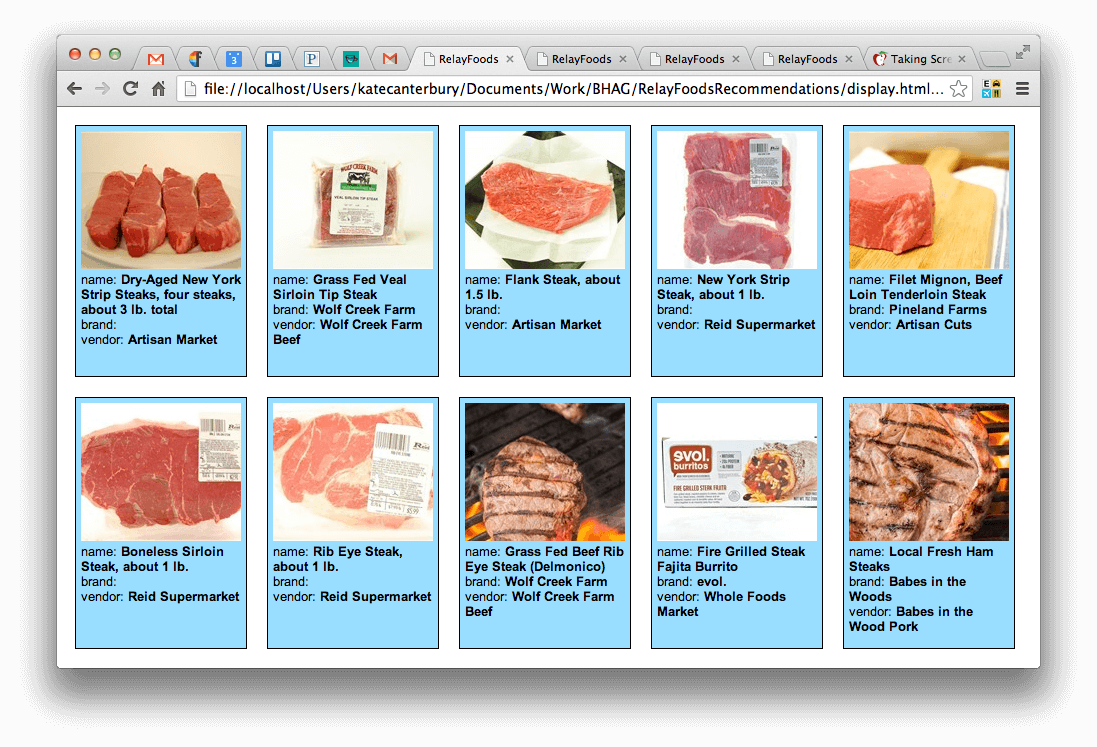

Steak

SOLR_URL: http://localhost:8983/recommendation?q=steak

Plenty of steak here – though there are a few things that aren't steak. What's the deal with that first result? Taco mix?

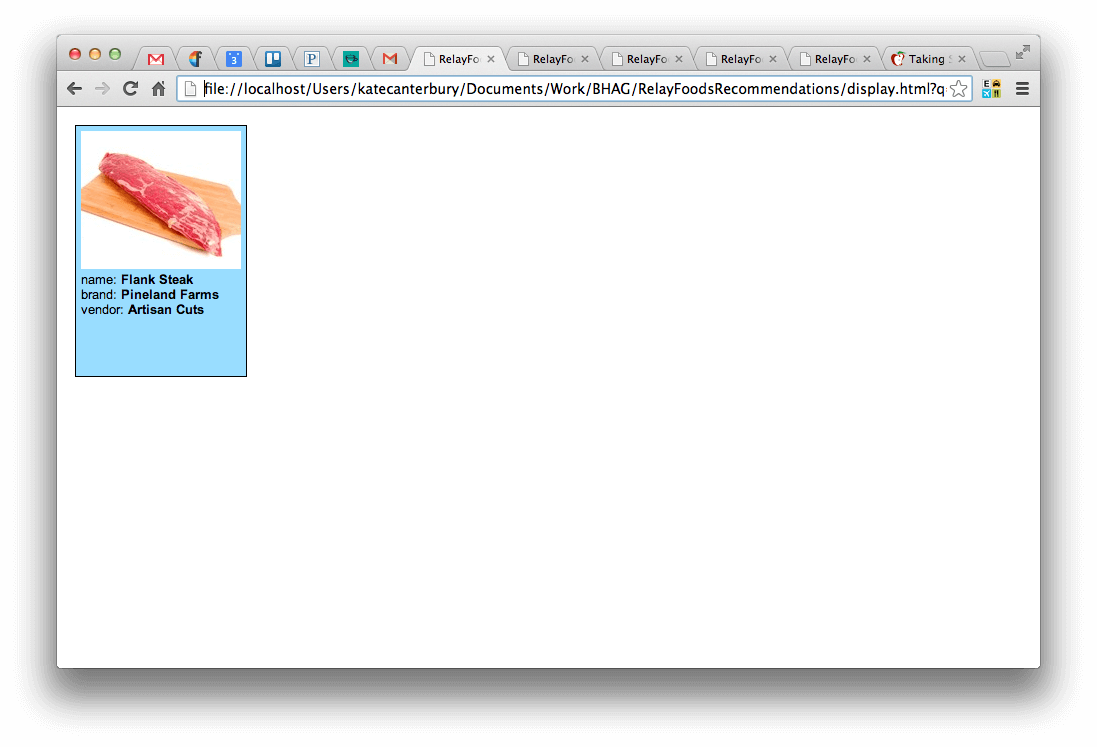

Wendy's Steak

SOLR_URL: http://localhost:8983/recommendation?q=steak&fq=recommendToTheseUsers:46f7a9a9-c661-4497-81a9-64ed537b65b0

Uh-oh, only one steak recommendation for Wendy. Does this mean that the recommendation system has failed her? Not really. She probably doesn't grill much, and because she hasn't bought related products in the past, the recommender lacks the information to make more than one recommendation. But when Wendy finally does search for steak, we can at least be assured that this steak will be at the top of her search results rather than the taco seasoning from the raw search.

Dave's Steak

SOLR_URL: http://localhost:8983/recommendation?q=steak&fq=recommendToTheseUsers:973990af-1482-4b63-a83c-bd2d8fc1ff32

Dave, on the other hand, apparently loves to grill. The richness of these recommendations indicates that his spending habits are probably akin to those of Tim “The Tool Man” Taylor.

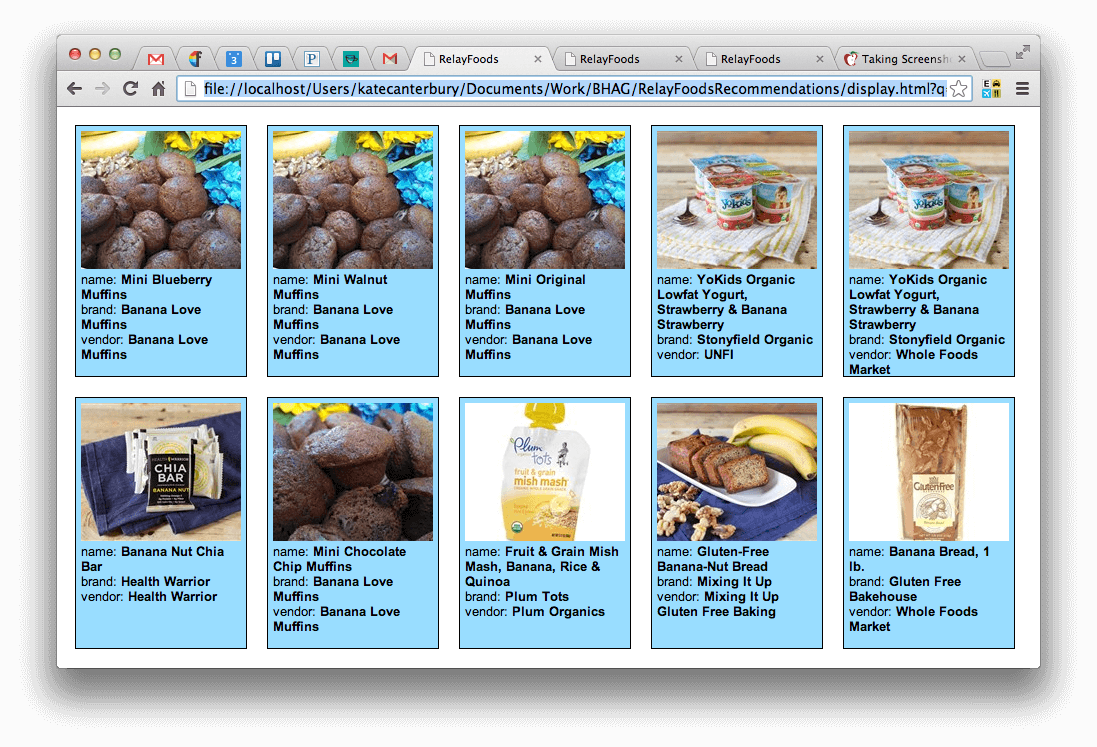

Bananas

SOLR_URL: http://localhost:8983/recommendation?q=banana

Those mushroom-looking things are muffins that contain bananas. No wait… they're actually muffins that don't contain bananas; rather, they're produced by a company that has “banana” in its name. As a matter of fact, the only bananas on this page are decorations surrounding the actual product. Hm… :-/

Wendy's Bananas

SOLR_URL: http://localhost:8983/recommendation?q=banana&fq=recommendToTheseUsers:46f7a9a9-c661-4497-81a9-64ed537b65b0

Yumm… bananas.

Dave's Bananas

SOLR_URL: http://localhost:8983/recommendation?q=banana&fq=recommendToTheseUsers:973990af-1482-4b63-a83c-bd2d8fc1ff32

Here we again see several different options for bananas. The other, non-banana results aren't necessarily bad results; remember that earlier, Dave appeared to be something of a convenience shopper, and banana chips and banana bread are certainly consistent with that.

Incorporating Solr Search-Aware Product Recommendations into Your Search

For even a moderately large inventory and user base, building the recommendation data is a relatively straightforward process that can be performed on a single machine and completed in minutes. Incorporating these recommendations into search results is as simple as adding a filter query (fq) parameter to the Solr search URL. In every case outlined above, the recommended subset is clearly better than the full set of search results. What's more, these recommendations are personalized for every user on your site! Incorporating this functionality into search will allow customers to find the items they are looking for much more quickly than they otherwise could.
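You don't need to resend every product document to load recommendation data into an existing index. One option is a Solr atomic update that replaces just the recommendToTheseUsers field on each product, as in the minimal sketch below. It makes three assumptions. First, the uniqueKey field is named id. Second, recommendToTheseUsers is a multivalued string field. Third, the schema meets Solr's atomic-update requirements (update log enabled, other fields stored or docValues). The helper name and sample data are hypothetical, and the sketch only builds the request body; it does not send it.

```python
import json


def atomic_update_docs(recommendations):
    """Turn product id -> user ids into Solr atomic-update documents.

    The "set" modifier replaces only the recommendToTheseUsers field and
    leaves the rest of each product document as it is.
    """
    return [
        {"id": product_id, "recommendToTheseUsers": {"set": sorted(user_ids)}}
        for product_id, user_ids in sorted(recommendations.items())
    ]


# Hypothetical output of the recommendation step (product id -> user ids).
recommendations = {
    "red-potatoes": ["46f7a9a9-c661-4497-81a9-64ed537b65b0"],
    "charcoal": ["973990af-1482-4b63-a83c-bd2d8fc1ff32"],
}

# This JSON array is the body you would POST to the core's /update handler
# (Content-Type: application/json); the HTTP request itself is omitted here.
print(json.dumps(atomic_update_docs(recommendations), indent=2))
```

Once the field is populated, the personalized search is just the original query plus the fq parameter described above.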

Want to Improve Your Conversion Rates?

We are currently seeking alpha testers for Solr Search-Aware Product Recommendation. If you are interested in trying out this technology, please contact us. We will review your current search application, get you set up with Search-Aware Product Recommendation, and help you collect metrics so that you can measure the corresponding increase in conversion rate.