A few years ago John Berryman and I experimented with integrating Latent Semantic Analysis (LSA) with Solr to build a semantically aware search engine. Recently I’ve polished that work off, integrated it with Elasticsearch, and sunk my teeth in a few levels deeper. I wanted to get a sense for whether this technique could be made really useful for building semantically aware search applications – and how exactly to do that. It turns out getting LSA to work requires a lot of data cleaning, feature modeling, and careful tuning.

What is LSA? How might it improve search relevance?

So we all know how a search engine works. Users enter search terms and the search engine uses an index to match documents that contain those terms. Search engines work well, but require very strict term matching. Search engines don’t know, without help, that “cat” and “kitty” correspond to similar ideas.

Looking at the search documents another way, you can discern relationships between terms and documents that get at broader ideas. One way to view these documents is as a big term-to-document matrix that maps each document to each term. Each entry mentions how often each term occurs in each document. Ala something like this:

TermDocMatrix:

| doc1 | doc2 | doc3 | doc4 | |

|---|---|---|---|---|

| cat | 0 | 1 | 0 | 2 |

| kitty | 0 | 2 | 0 | 0 |

| the | 6 | 5 | 4 | 5 |

| dog | 2 | 0 | 1 | 0 |

| pooch | 3 | 0 | 3 | 0 |

If you look at this matrix, patterns jump out at the savvy reader. Some documents clearly are about cats (docs 2 and probably 4). Other documents are about dogs (1 and 3). One might even look at this matrix and realize that the word “kitty” is pretty closely related to “cat.” Armed with this insight, we might have the capacity to take user searches for “kitty” and return document 4, as it seems to have a very strong affinity to the idea of “catness” without.

Latent Semantic Analysis is one technique that attempts to recognize these patterns. Latent Semantic Analysis runs a matrix operation called Singular Value Decomposition (SVD) on the term-document matrix. A Singular Value Decomposition can be interpreted many ways. Fundamentally, it factors the matrix into something of a simpler form. Mathematically it’s a way of saying that our matrix can be decomposed (some might say compressed) around the corpus’s main ideas:

TermDocMatrix = catness + dognessMost importantly, when you look into “catness” and “dogness” you’ll see the components give you insight into that topic’s overall strength, each term’s affinity for that topic, and each document’s affinity for that topic, as in:

catness = <eachTermsCatness> * corpusCatnessStrength * <eachDocsCatness>Here

- eachTermsCatness: a vector that corresponds to every term’s affinity for the “cat” idea

- eachDocsCatness: corresponds to every document’s affinity for the “cat” idea

- corpusCatnessStrength: how strongly catness occurs in the corpus

It’s beautiful! If we look at “eachTermsCatness” we might see something listing every term in our corpus and its affinity to the idea of “cat”

<cat: 2.0, kitty: 1.0, the: 0.0, banana, pooch: -2.01, dog:-2.0~>And each document’s “catness” has a similar look and feel, only with elements for each document:

<doc4: 2.0, doc 2:1.9, doc3:-1.9, doc1:-2.0>And when you multiply these together, you get a rough affinity for each term-document pairing and the “catness” idea. Perhaps something like

| doc1 | doc2 | doc3 | doc4 | |

|---|---|---|---|---|

| cat | 0 | 0.6 | 0 | 0.5 |

| kitty | 0 | 0.4 | 0 | 0.3 |

| the | 0 | 0 | 0 | 0 |

| dog | -0.4 | 0 | -0.3 | 0 |

| pooch | -0.6 | 0 | -0.7 | 0 |

If we kept going, and added back in the other components (like dogness), we get back to our original matrix:

| doc1 | doc2 | doc3 | doc4 | |

|---|---|---|---|---|

| cat | 0 | 1 | 0 | 2 |

| kitty | 0 | 2 | 0 | 0 |

| the | 6 | 5 | 4 | 5 |

| dog | 2 | 0 | 1 | 0 |

| pooch | 3 | 0 | 3 | 0 |

In other words, Latent Semantic Analysis should let us break up a big term-document matrix into constituent, semantically meaningful parts. We can say, this giant matrix can really be factored into these 50 topics. And instead of us asserting the topics they’re flushed out of the matrix.

We should be able to use this to do a lot of smart things to improve search relevance:

- Detect synonyms – noticing kitty is closely related to “cat” and perhaps synonymous

- Cluster terms – which ideas go well together: “cat” “kitty” etc

- Cluster documents – which documents seem to carry similar topics – noticing docs 2 and 4 are “cat” documents

- Automatically tag documents – noticing docs 2 and 4 are “cat” docs, you might choose to tag them with that topic to organize information

- Remove unimportant topics from the index. I said above you can reconstruct the matrix from the topics. What if you set the strength of some of the weaker topics to “0.” Ignoring unimportant topics also helps the performance of many of the computational steps

- Compress the term-document matrix. Term-document matrices are notoriously sparse, so by representing a 1000×1000 matrix by a 50 document to topic and topic to term vectors saves tremendous amount of space.

It should be the best thing since sliced bread, right?

The LSA Bread is Not Pre sliced

The last section mentioned the “theory” (or marketing) of why LSA is so awesome. In reality, LSA takes a great deal of work, trial and error to get results that seem correct to expert human eyes. (In fact, I suppose now is as good a time as any to say the results above are entirely contrived for illustrative purposes :-p ).

I want to detail the hands-on practical realities of LSA. It’s not as magical awesome sauce as you might be led to believe. In fact, without careful attention by someone that knows what they’re doing, you’re probably just as likely to screw up search relevance as improve it. Let’s see why.

Stopwords

Indeed, my example above is contrived and flawed a bit on purpose. You’ll notice I left out a huge component of the text. The use of the word “the.” The SVD matrix operation has no idea that “the” is a pretty meaningless word in English. In reality, the matrix above might get decomposed into something like:

TermDocMatrix = theness_catness + dognessHere, theness_catness is a blend of the word “the” and the cat words. This happens because if you notice in the matrix above, the stopwords occur slightly more frequently in cat documents than dog documents.

Another way to look at this is to understand that the SVD operation underlying LSA aims to decompose the matrix for later reconstruction. It acts as a compression operation. Accounting for “the” is just as important as accounting for our intuitive topics: cats and dogs.

Zipf’s Law vs LSA

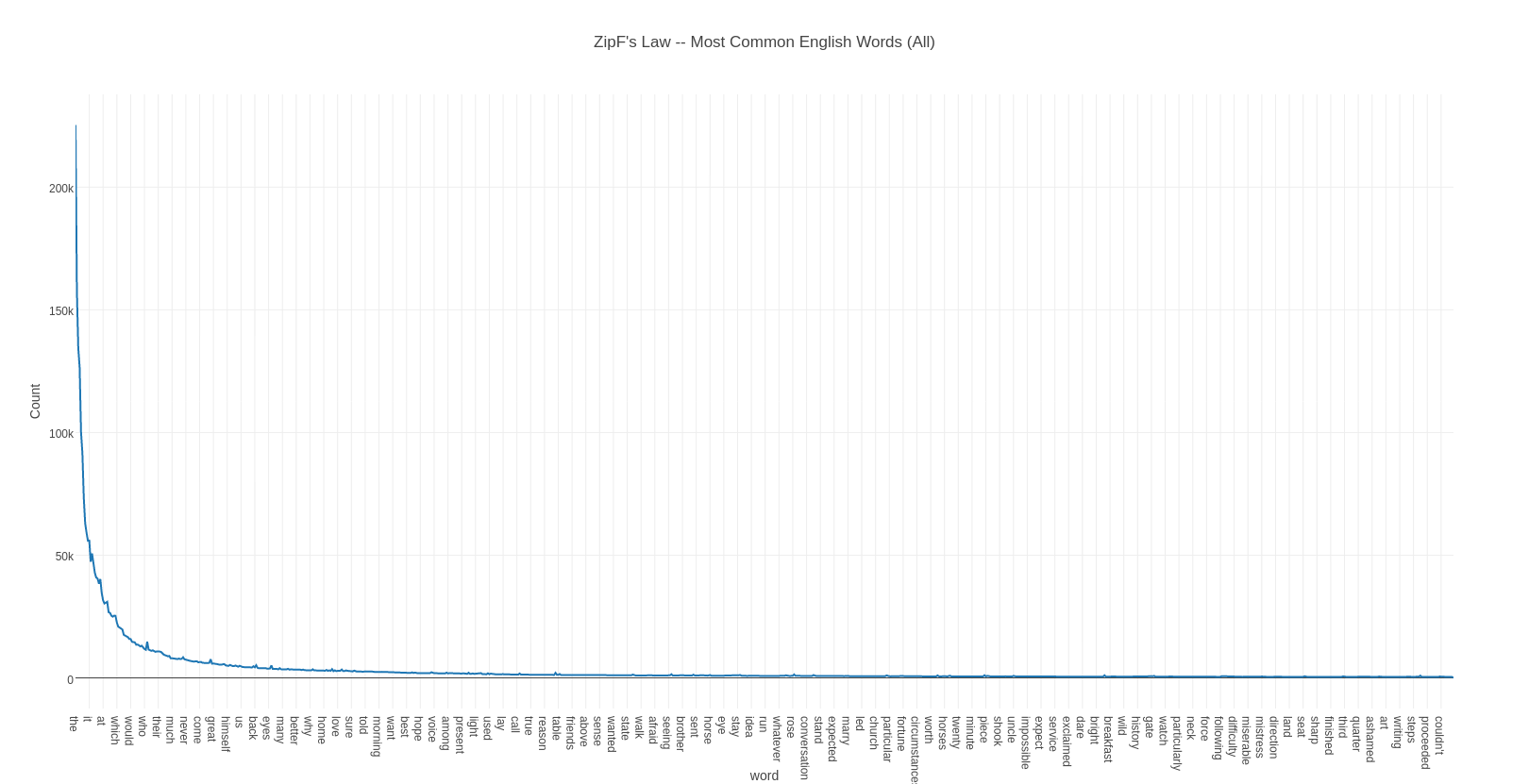

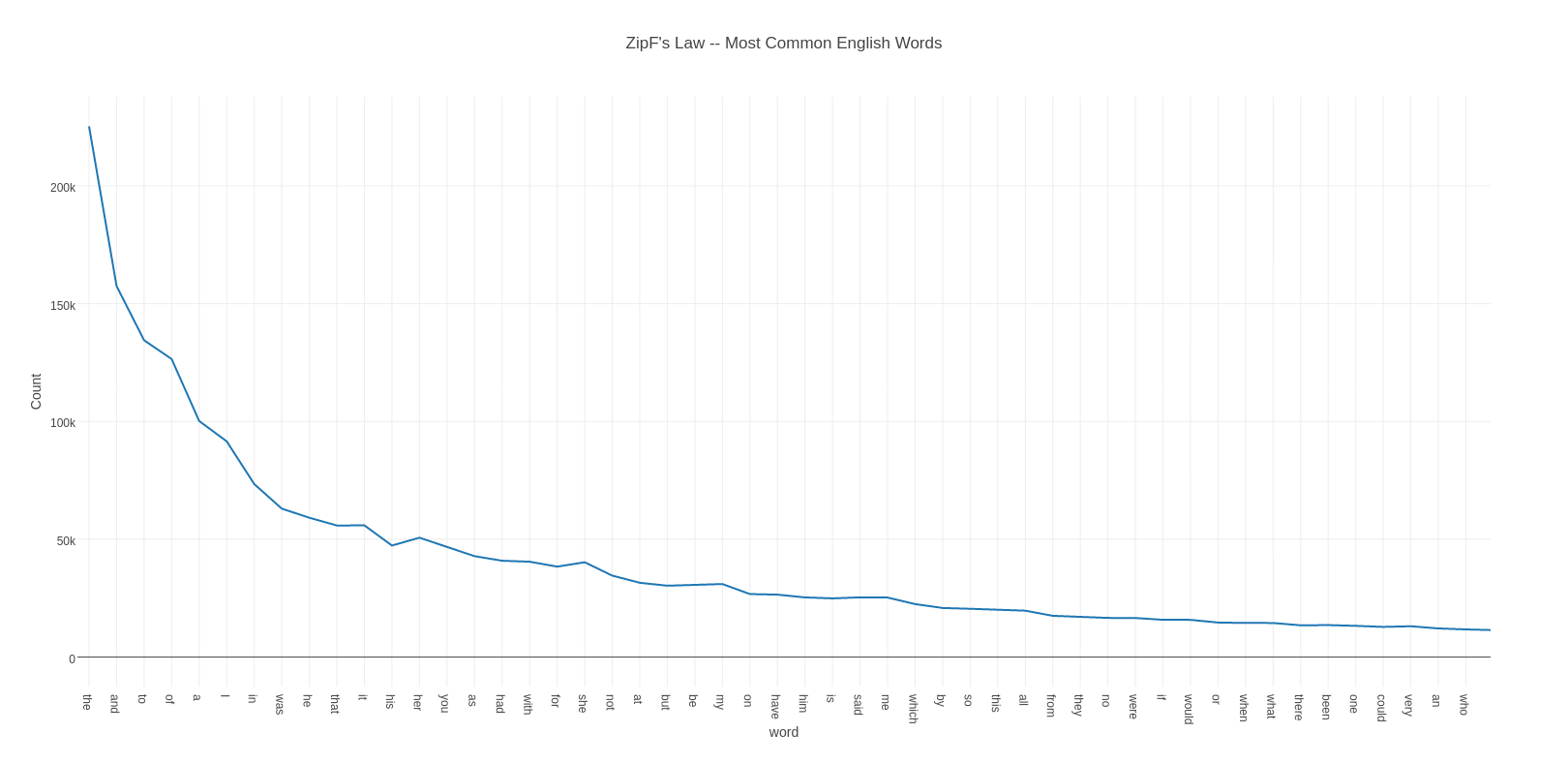

Ok but maybe our matrix is just something weird about “cat” docs. We can generally ignore this problem for larger corpuses, right? No. Given the statistical distribution of words in documents, the problem becomes starker. Let me introduce you to my little friend, Zipf’s law. Zipf’s law states that the frequency of terms in a corpus of natural language follows the rather stark Zipfian distribution, as shown in the two graphs below:

Zooming in on the most common words:

How terms occur in text follows the The Zipfian distribution, as shown above. Some words occur very frequently, and the drop off is very fast. If “the” occurs say one in every 10 words with “cat” and “dog” 1 in 1000, it’s not clear LSA can actually model meaningful topics through the noise of stopwords. Let’s walk through why.

Compare a 100 word document to a 50 word document:

100 word document: (cat: 1, the: 10)

50 word document: (cat: 1, the: 5)When you include other traditional stopwords, the picture gets noisier

100 word "cat" document: (cat: 1, the: 10, a: 5, of: 4)

50 word "cat" document: (cat: 1, the: 5, a:3, of: 2)The feature with the greatest variability in our corpus is the relative occurrence of stopwords due to document length. Further, long documents have more unique words, which means even more potential for stopwords.

Because of this, matrix factorization notices the biggest difference in documents is the number of stopwords. As this is due to length, it causes the most prominent topic to be stopwords that occur in long documents, as shown in this decomposition:

TermDocMatrix = long_stopword_docs + catness + dognessAnother way of saying this is SVD assumes in a given document terms will be normally distributed across documents. The word “the” should just be noise to SVD if our documents present a nice bell curve of “the” occurrences. But that’s not how “the” is distributed in English text, and that causes LSA to focus on these large “the” differences document to document. The distribution of “the” is extremely sensitive to length where the distribution of cat is is extremely resistant to modifications in document length.

More Stopwords Than Meet The Eye

Ok if you’re screaming “no duh” remove the stopwords already. Everyone knows stopwords are bad!

The problems with Zipfian don’t stop at traditional stopwords. Other terms, what I’ll call pseudo-stopwords, are words clearly unrelated to what we’d consider meaningful topics. Yet these psuedo-stopwords live in a big middle gray area in the Zipfian distribution of useful English text without being overly specific.

For example, after removing the obvious English stopwords (the, a, of, etc), the gender of the text comes through as the next most prominent documents (notice it in the Zipfian graph above). Documents written about men, using very commonly used words like “he,” “him,” “his” surface. Or for Question-and-Answer text like scifi stackexchange, different ways of asking questions form their own topics (“who” vs “what”).

Words like “he” and “what” still occur very far to the left in the Zipfian hierarchy. So just like stopwords, they vary significantly with length, giving you a topic decomposition like:

TermDocMatrix = he_docs + she_docs + catness + dognessYet it’s not even that clean, you’re just as likely to get your cat and dog topics munged into the he/she documents. For example, if more people write about their female cats and male dogs:

TermDocMatrix = he_dog_docs + she_cat_docsThe situation seems hopeless!

No Duh: It’s called TF*IDF …. Right

You’re probably still screaming at your screen at me. Everyone knows you should use TF*IDF, not term frequency directly to score common terms lower than rare, specific ones.

What is TF*IDF? The classic way search engines and NLP account for language’s Zipfian distribution is to divide by a term’s “document frequency.” Terms like “the” occur in every document. So in our cat/dog example above a single “the” in a document is really a ¼ (one occurrence over four documents). Cat is more rarely used, so a single cat occurrence in a document gets scored as ½.

Our goal is to make the stopwords and pseudo-stopwords appear as noise, with no impact in the topic modeling, while our important words “cat” and “dog” really stick out.

Using direct TF / DF, you’d get this matrix:

| doc1 | doc2 | doc3 | doc4 | |

|---|---|---|---|---|

| cat | 0 | 1/2 | 0 | 2/2 |

| kitty | 0 | 2/1 | 0 | 0 |

| the | 6/4 | 5/4 | 4/4 | 5/4 |

| dog | 2/2 | 0 | 1/2 | 0 |

| pooch | 3/2 | 0 | 3/2 | 0 |

Restated:

| doc1 | doc2 | doc3 | doc4 | |

|---|---|---|---|---|

| cat | 0 | 0.5 | 0 | 1.0 |

| kitty | 0 | 2.0 | 0 | 0 |

| the | 1.5 | 1.25 | 1.0 | 1.25 |

| dog | 1.0 | 0 | 0.5 | 0 |

| pooch | 1.5 | 0 | 1.5 | 0 |

This helps quite a bit. The problem is less pronounced than using term frequency directly. But dividing by document Frequency doesn’t account for document length, you’ll still have long documents with higher TF*IDF scores for stopwords. In other words, DF doesn’t go far enough to ameliorate a situation like our “cat” vs “stopwords” example from above:

100 word "cat" document: (cat: 0.5, the: 2.5, a:1.25, of: 1.0)

50 word "cat" document: (cat:0.5, the: 1.25, a:0.75, of: 0.5)

(assuming cat has DF of 2, the of 4)The longer documents still have quite a few more stopwords (the, a, of…) despite their TF*IDF score. So LSA still groups documents with a bias towards longer documents.

Accounting for Document Length

For tuning against document length, you have a couple of options. The most obvious thing to do is to bias towards shorter documents. For example, divide a term’s TF*IDF score by the total number of terms in that document. This can help scenarios where very long documents create artificial stopword-heavy topics.

But consider what happens if you go too far in this direction. If “persian” is extremely rare in your animal corpus, your scores might look something like

25 word "persian" document: (persian: 5.0, he: 0.01, it: 0.15)

50 word "persian" document: (persian: 10.0, he: 0.02, it: 0.30)This can create topic biases in the other direction. Suddenly the SVD operation must account for a handful of documents with very strong “persian” features. Instead of your topics being psuedo-stopwords, they’re instead a highlight of terms too obscure to be considered natural topics.

The situation is much worse if you consider the occasional mention of off-topic words. For example, what if someone wrote about their cat Mr Puffinpants in a short document.

25 word "Puffinpants" document: (puffinpants: 100.0, he: 0.01, it: 0.15)Suddenly you get a very powerful “puffinpants” topic, all just because puffinpants is rare and mentioned prominently in a short text document.

LSA requires work!

The takeaway from all this is to get LSA to work as a topic modeling approach, you need to do a lot of tuning. If you model your document’s features with just TF or TF*IDF, expect to get topics latent to English itself. If you bias too heavily towards rare terms or short documents, expect to get topics that cover more obscure topics.

There’s a million other options we could take this, and really I could fill many more blog posts with my trials and tribulations. For example:

- Try more mature TF*IDF based calculations, such as Lucene’s TF*IDF or BM25. You’ll likely still need to tune calculations for your case, as LSA is so sensitive to tuning.

- Perhaps we shouldn’t count term frequency at all. Simply mark a 1 or 1.0 / doc freq if the term is mentioned, or a 0 if it isn’t. This would sidestep Zipf for term frequency. However long documents still contain more terms, even if they’re scored lower. Further frequent terms, though scored lower, will still occur across more documents. We try to account for by this dividing by document frequency, but for psuedo-stopwords (“he” or “she”) this sometimes isn’t enough.

- Use skipgrams from the document text as LSA’s “document”. Skipgrams group a word with the words occurring N skips after the present word. For example “Mary had a little lamb” becomes “Mary had”, “Mary a”, “Mary little”, “Mary Lamb.” This gives you word groupings in a narrow window after an initial word. However if you’re trying to detect synonyms, like “cat” ~ “kitty”, its unlikely synonyms will be used in the same sentence. Its more likely synonyms are spread out throughout a document to avoid repetitiveness in text.

- Experiment with selecting a random N words from a document. This seems like a good idea until you realize that this random selection will be biased towards Zipf. Even if you select 100 unique terms, you’re very likely to get stopwords and psuedo-stopwords.

- Limit LSA to fields that behave better than prose. In my experimentation titles, like the question titles at Stackexchange, tend to have fewer stopwords and are more “on topic” in their verbiage. This tends to have a positive impact on LSA. Trey Grainger used LSA on the user search strings in his knowledge graph experimentation, which seems to confirm my suspicions that LSA works better on titles and other “entity rich” fields.

Suffice it to say, The deeper I get into this, the more I want to experiment!

I’m curious about your ideas around using LSA! Let me know what tuning options you’ve implemented succesfully, I’m eager to learn from what you’ve tried!

LSA and the Lurking Psuedo topics

You’ll notice that the trick with LSA is landing on topics that we consider “natural.” LSA can oscilate between overly broad, vague topics like gendered pronouns and overly specific topics like documents about Mr. Puffinpants.

This, arguably, is the main feature, not the bug of LSA.

Think of it another way, imagine you’re trying to figure out what’s important in alien language. They say

"zurb zurb zeple xe"

"zow zeple xe zow"

"zub zeple zub xe"

"zow zee zoo zow"The most prominent difference here is some utterances have “zow” and some have “zurb.” If LSA told you this, you’d feel one step closer to translating an Alien language!

Unfortunately, we don’t care about general latent topics. We already know English prose is written using differently gendered pronouns. We instead want insights about our corpus about our domain, be it pets or science fiction trivia. LSA needs a lot of tuning to get there. You can get it there.

My final verdict on LSA is to set it aside for use on general prose-like text. I’m going to turn my focus towards Latent Dirichlet Allocation (LDA) produces better results with less tuning. Look for future blog posts as I learn more about LDA!

And as always, if you have any comments or if you’re interested in seeking our semantic search services, be sure to contact us!