The following is an excerpt from our upcoming book Relevant Search from a chapter written by OSC alum John Berryman. Use discount code turnbullmu to get 38% off!

Precision and recall are two fundamental measures of search relevance. Given a particular query and the set of documents returned by the search engine (the result set), these measures are defined as follows:

- Precision is the percentage of documents in the result set that are relevant.

- Recall is the percentage of relevant documents that are returned in the result set.
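If it helps to see the two definitions side by side, here is a minimal Python sketch. The function names and the choice to score an empty set as 0 are assumptions made for illustration, not something taken from the book.

```python
def precision(results, relevant):
    """Percentage of documents in the result set that are relevant."""
    results, relevant = set(results), set(relevant)
    if not results:
        return 0.0  # assumption: an empty result set scores 0
    return 100.0 * len(results & relevant) / len(results)


def recall(results, relevant):
    """Percentage of relevant documents that appear in the result set."""
    results, relevant = set(results), set(relevant)
    if not relevant:
        return 0.0  # assumption: nothing relevant to find scores 0
    return 100.0 * len(results & relevant) / len(relevant)
```

Both functions count the same overlap (relevant documents that were returned). They differ only in the denominator: precision divides by the size of the result set, recall by the number of relevant documents.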

Admittedly, these definitions are a little hard to follow at first. They may even sound like the same thing. In the discussion that follows, we provide a thorough example that will help you understand these definitions and their differences. You’ll also begin to see why it’s so important to keep these concepts in mind when designing any application of search.

Additionally, we demonstrate how precision and recall are often at odds with one another. Generally, the more you improve recall, the worse your precision becomes, and the more you improve precision, the worse your recall becomes. This implies a limit on the best you can achieve in search relevance. Fortunately, you can get around this limit. We explore the details in the discussion that follows.

Precision and recall by example

Let’s lead with another example. And this time just to be different, let’s use, oh, I don’t know, fruit. After you recover from the wormy apple incident of the previous section, you go back to the fruit stand and consider the situation in more detail.

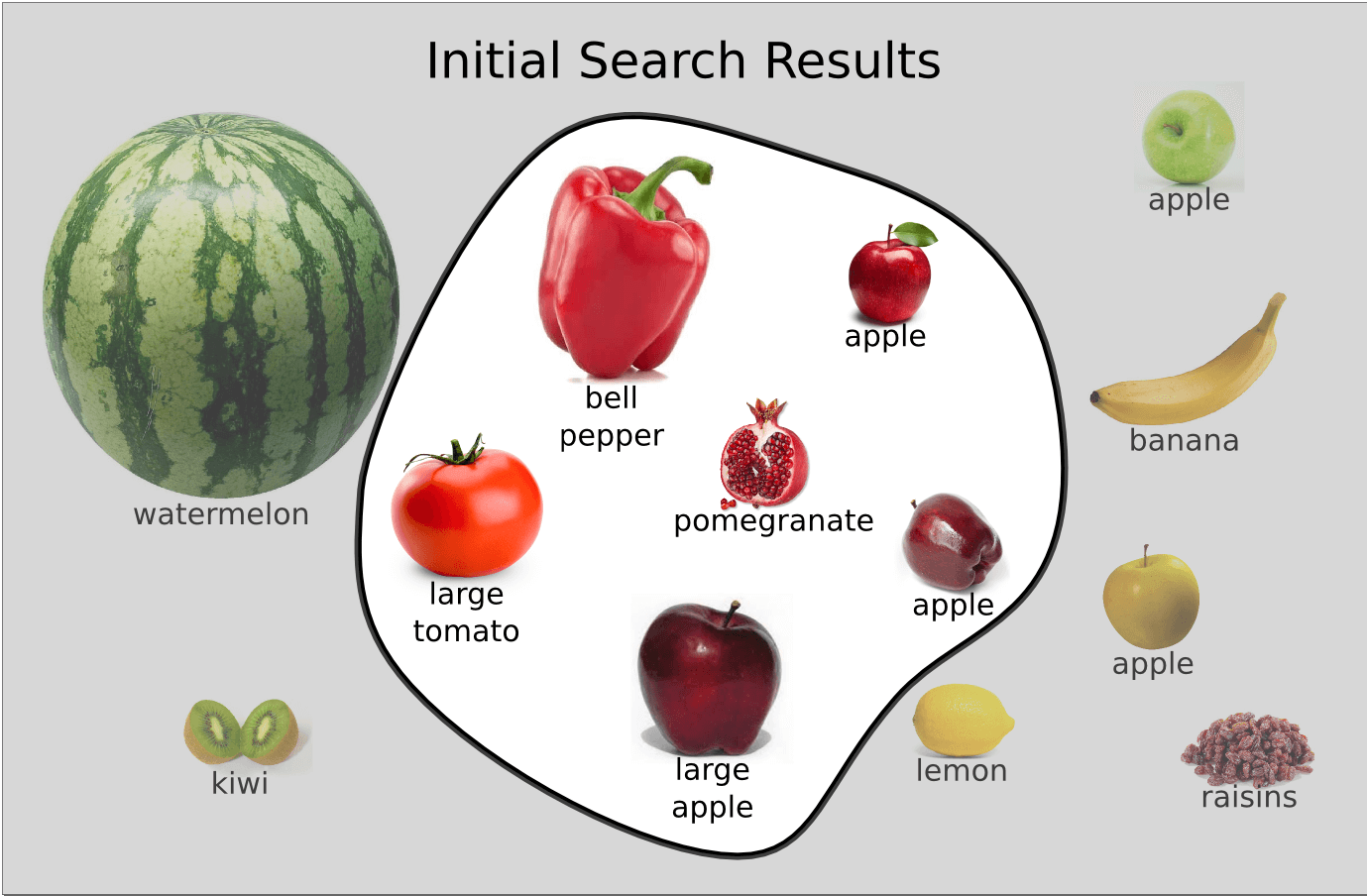

Figure 4.2 Illustration of documents and results in the search for apples


When you originally went to the fruit stand, you were looking for apples, but more specifically, your search criteria were “red, medium-sized fruit.” These criteria led you to the search results indicated in figure 4.2. Let’s consider how this result set can be described in terms of precision and recall. Looking at the search results, you have three apples and three red, medium-sized fruits that aren’t apples (a tomato, a bell pepper, and a pomegranate). Restating the previous definition, precision is the percentage of the results that are correct. In this case, three of the six results are apples, so the precision of this result set is (3 ÷ 6) × 100, or 50%.

Furthermore, upon closer inspection of all the produce, you find that there are five apple choices among the thirteen fruit types available at the stand. Recall is the percentage of the correct items that are returned in the search results. In this case, there are five apples at the fruit stand, and three were returned in the results. The recall for your apple search is (3 ÷ 5) × 100, or 60%.
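The arithmetic above can be checked with a few lines of Python. The apple labels (`apple_1` through `apple_5`) are hypothetical placeholders, since the text only gives counts. The three non-apples are the ones named in the example.

```python
# Result set for "red, medium-sized fruit" (figure 4.2).
# Apple labels are hypothetical; the text only gives counts.
results = ["apple_1", "apple_2", "apple_3",
           "tomato", "bell_pepper", "pomegranate"]

# All five apple choices at the stand, returned or not.
apples = {"apple_1", "apple_2", "apple_3", "apple_4", "apple_5"}

hits = [item for item in results if item in apples]

precision_pct = 100 * len(hits) / len(results)  # 3 of 6 results are apples
recall_pct = 100 * len(hits) / len(apples)      # 3 of 5 apples were returned

print(f"precision: {precision_pct:.0f}%  recall: {recall_pct:.0f}%")
```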

In the ideal case, precision and recall would both always be at 100%. But this is almost never possible. What’s more, precision and recall are most often at odds with one another. If you improve recall, precision will suffer and your search response will include spurious results. On the other hand, if you improve precision, recall will suffer and your search response will omit perfectly good matches.

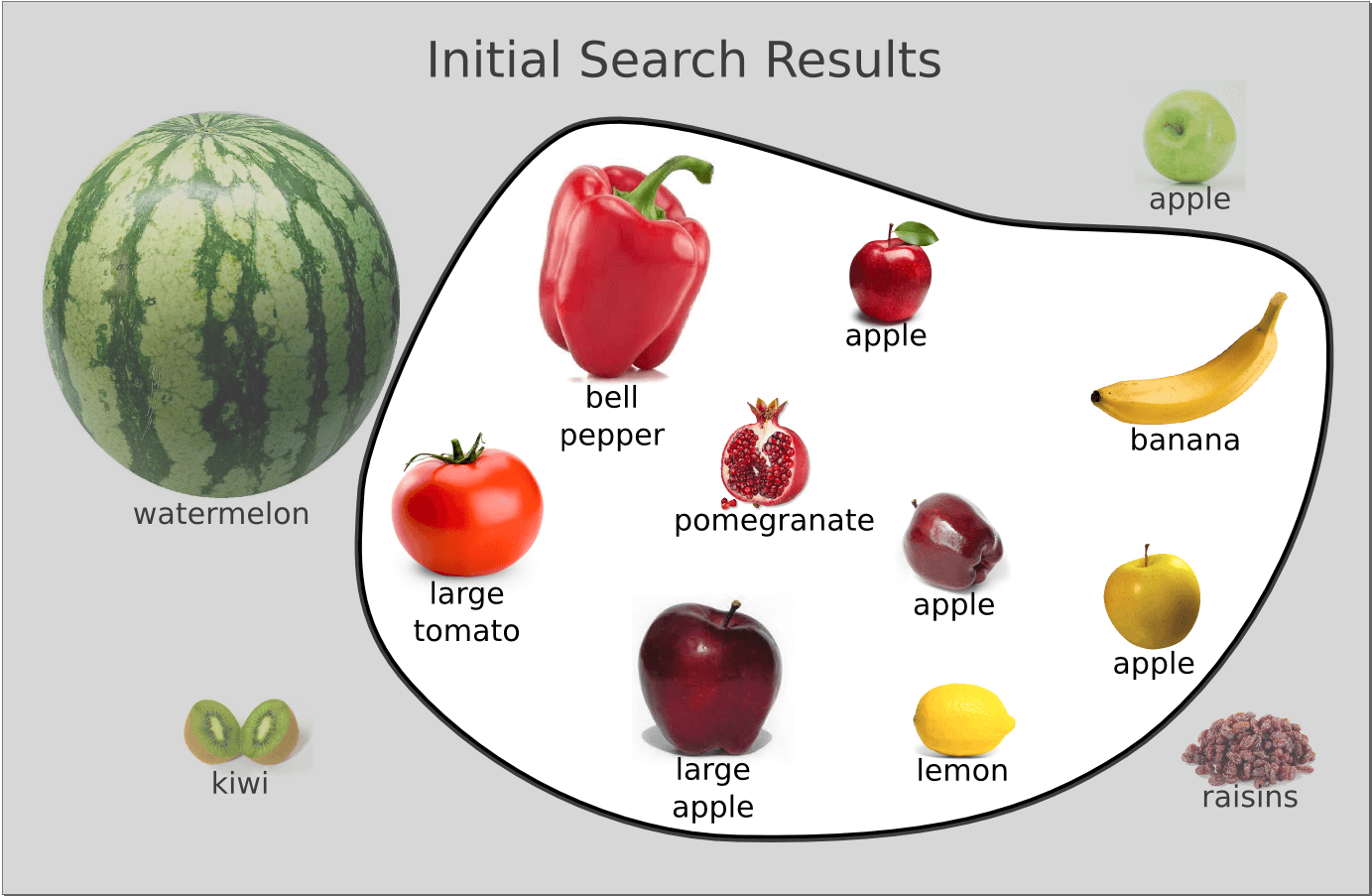

Figure 4.3 Example search result set when loosening the color-match requirements


To better understand the warring nature of precision and recall, let’s take a look at this phenomenon in the context of our fruit example. If you want to improve recall, you must loosen the search requirements a bit. What if you do this by including fruit that’s yellow? (Some apples are yellow, right?) As shown in figure 4.3, you do pick up another apple, thus improving recall to 80% (four of the five apples). But because most apples aren’t yellow, you’ve also picked up two more erroneous results, decreasing precision to 44% (four apples among nine results).

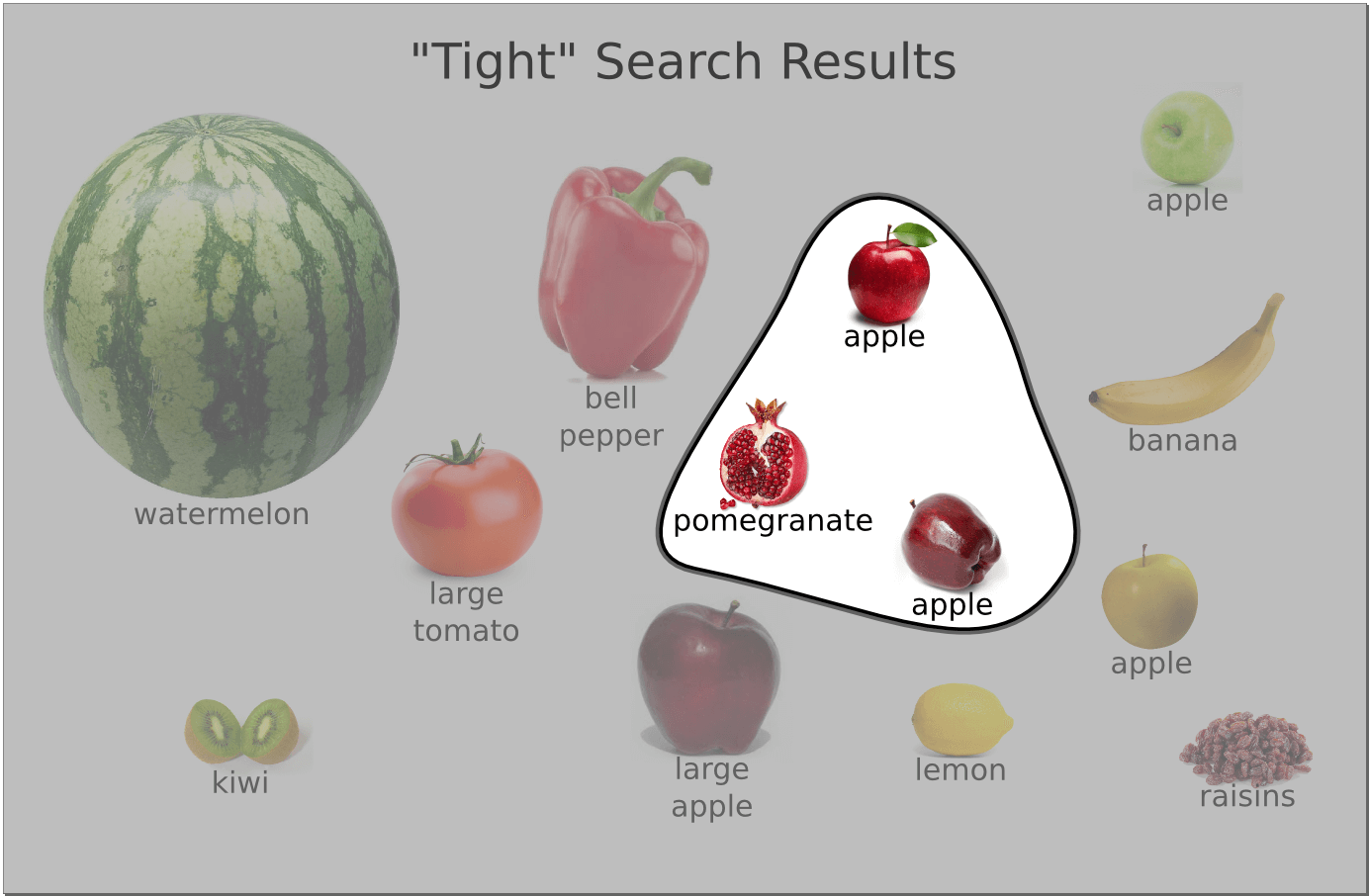

Figure 4.4 Example search result set when tightening the size requirements


Let’s go the other way with our experiment. If you tighten the search criteria, for example by tightening the definition of medium-sized, you get results that look like those in figure 4.4. Here precision increases to 67% (two apples among three results) because you’ve removed two slightly un-medium fruits. But in the process, you’ve also removed a slightly oversized apple, taking recall down to 40% (two of the five apples).
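To see the trade-off in one place, the sketch below recomputes both measures for the three result sets. The counts are inferred from the text rather than read off the figures: six results with three apples originally, nine results with four apples after loosening color, and three results with two apples after tightening size. The names `scenarios` and `summarize` are illustrative.

```python
TOTAL_APPLES = 5  # apple choices at the stand, per the example

# (results returned, apples among them), inferred from the text
scenarios = {
    "original (figure 4.2)": (6, 3),
    "loosened color (figure 4.3)": (9, 4),
    "tightened size (figure 4.4)": (3, 2),
}


def summarize(returned, apples_returned, total_relevant=TOTAL_APPLES):
    """Return (precision, recall) as whole-number percentages."""
    precision_pct = 100 * apples_returned / returned
    recall_pct = 100 * apples_returned / total_relevant
    return round(precision_pct), round(recall_pct)


table = {name: summarize(*counts) for name, counts in scenarios.items()}

for name, (p, r) in table.items():
    print(f"{name:30s} precision {p:3d}%  recall {r:3d}%")
```

Reading down the output, recall rises only when precision falls, and vice versa, which is the tension the fruit stand illustrates.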

Although precision and recall are typically at odds with one another, there’s one possible way to overcome the constraints of this trade-off: more features. For instance, if you include another field in your search, for flavor, then the tomato would be easy to rule out because it’s not sweet at all. But, unfortunately, it’s not always easy to identify new features to pull into search. And in this particular case, if you decided to go around flavor sampling the fruit in order to identify apples, you’d probably soon have an upset produce manager to contend with!
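Here is a rough sketch of what adding a flavor feature might look like. The `Fruit` class and its `sweet` field are hypothetical. Only the tomato's value (not sweet) comes from the text. The other flavor values, and the apple labels, are assumptions made so the example runs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fruit:
    name: str
    is_apple: bool
    sweet: bool  # hypothetical flavor field


TOTAL_APPLES = 5  # apple choices at the stand, per the example

# Original result set for "red, medium-sized fruit".
results = [
    Fruit("apple_1", True, True),
    Fruit("apple_2", True, True),
    Fruit("apple_3", True, True),
    Fruit("tomato", False, False),      # from the text: not sweet at all
    Fruit("bell_pepper", False, True),  # assumption for illustration
    Fruit("pomegranate", False, True),  # assumption for illustration
]


def score(items):
    """Return (precision, recall) in percent for a result set."""
    hits = sum(fruit.is_apple for fruit in items)
    return 100 * hits / len(items), 100 * hits / TOTAL_APPLES


before = score(results)
after = score([fruit for fruit in results if fruit.sweet])

print("without flavor:", before)
print("with flavor:   ", after)
```

Under these assumptions the flavor filter drops the tomato and nothing else, so precision rises from 50% to 60% while recall stays at 60%. That is the appeal of a new feature: it can remove wrong results without also removing right ones.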

Would you like to learn more?

If you’re interested in more, be sure to grab the book! And get in touch with OSC if you have tough search problems you need help with!