It’s understandable to be frustrated with search. You’ve probably heard someone like OSC lecturing you on relevance best practices, taxonomies, metrics, measurement, and more. You’ve probably thought “wow that’s a lot of work.” Indeed!

An understandable question you might then ask is – “well won’t AI just solve all these problems soon?”

If only – if anything, it creates many, many new problems.

While there’s a lot of upside to machine learning in search, I do need to warn you: there are reasons to be wary of introducing machine learning to your search stack! Consider these questions before pulling the trigger on a solution.

Are you ready for the extra cost and complexity?

Beware of switching to a machine learning solution because it “makes things easier” or “costs less.” Indeed, the opposite is almost always true. Expect it to be harder, more complex, and more expensive (but hopefully with a higher payoff). I regularly make the case to clients for avoiding ML solutions, trying to help them understand the downsides relative to simpler solutions. Fellow search consultant Daniel Tunkelang lists many of the complexities. The concerns include:

- Interpretability – it’s often hard to know why a model or solution is doing what it’s doing

- Maintainability – there are often many more moving parts in product-ready machine learning solutions

- Garbage in, garbage out – gathering and cleaning training data is very time consuming, as is validating that the model is doing what you expect at scale

- Expertise – The experience required to do machine learning well is hard to find and expensive.

The reason to take on a machine learning approach is that the potential outcome is worth the higher investment: there’s a big win to be made. But if you don’t need it, don’t invest in it. There’s often a lot of upside in avoiding machine learning.

How will you know if your machine learning investment is successful?

Another characteristic of great search teams: they are obsessed with measurement and feedback. They do this because search is core to their value, and they absolutely must measure how any change impacts that value.

They have two things down pat:

- KPIs & Instrumentation – can you tie search to a business metric? This is often easier said than done. But wouldn’t it be great if you could brag to the C-level about how well your investment paid off?

- Relevance judgments – a ‘judgment’ encodes how ‘good’ a search result is for a query. There are many ways of coming up with judgments. With a good judgment list, you can get a sense of whether a change positively or negatively impacts search before going to production.
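To make the judgment list idea concrete, here is a minimal sketch of scoring two rankings offline before anything ships. Everything in it is an assumption for illustration: the query, the document ids, the 0 to 3 grading scale, and the choice of NDCG as the metric (one common option among many, not a recommendation from the talks mentioned here).

```python
import math

# Hypothetical judgment list: (query, doc_id) -> grade, from 0 (irrelevant) to 3 (ideal).
JUDGMENTS = {
    ("rambo", "doc_first_blood"): 3,
    ("rambo", "doc_rambo_iii"): 2,
    ("rambo", "doc_rocky"): 1,
    ("rambo", "doc_bambi"): 0,
}


def dcg(grades):
    """Discounted cumulative gain: high grades are worth more near the top."""
    return sum((2 ** g - 1) / math.log2(rank + 2) for rank, g in enumerate(grades))


def ndcg_at_k(query, ranked_doc_ids, judgments, k=10):
    """NDCG@k for one query. Unjudged documents are treated as grade 0."""
    grades = [judgments.get((query, doc), 0) for doc in ranked_doc_ids[:k]]
    ideal = sorted(
        (g for (q, _), g in judgments.items() if q == query), reverse=True
    )[:k]
    ideal_dcg = dcg(ideal)
    return dcg(grades) / ideal_dcg if ideal_dcg > 0 else 0.0


# Two hypothetical result orderings for the same query.
current = ["doc_bambi", "doc_rocky", "doc_first_blood", "doc_rambo_iii"]
candidate = ["doc_first_blood", "doc_rambo_iii", "doc_rocky", "doc_bambi"]

print("current  :", round(ndcg_at_k("rambo", current, JUDGMENTS), 3))
print("candidate:", round(ndcg_at_k("rambo", candidate, JUDGMENTS), 3))
```

A real judgment list covers many queries, and the per-query scores are usually averaged. The number is only as trustworthy as the judgments behind it, which is why the next point matters.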

As my colleague Liz Haubert says in her Haystack talk – the key to all of this is having many, independent sources of measurement – not just one. And definitely not one just tied to your vendor. When it comes to data – never trust, always verify!

Case in point: the two Learning to Rank talks at Haystack Europe spent nearly half their time on measurement, often with no clear answer about what a relevant search result was for a user’s search. This was despite the speakers being some of the smartest search people I know, and despite their having rich user analytics (clicks, purchases, etc.) to draw from AND expert, internal users grading results. Most distressingly, in the TextKernel Learning to Rank talk, the measurement approaches pointed in different directions, with ongoing efforts to understand why!
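One simple way to notice that two measurement sources point in different directions is to compare, query by query, whether they agree that a change helped or hurt. The sketch below assumes you already have a per-query metric delta (candidate minus baseline) from each source. The function name, the input format, and the numbers are all hypothetical, and this is not the method used in either talk.

```python
def direction_agreement(source_a, source_b):
    """Compare two measurement sources on the queries they share.

    Each source maps query -> metric delta (candidate minus baseline).
    Returns (agreement_rate, queries where the sources disagree on direction).
    """
    def sign(x):
        return (x > 0) - (x < 0)

    shared = sorted(set(source_a) & set(source_b))
    if not shared:
        return 0.0, []
    disagreements = [q for q in shared if sign(source_a[q]) != sign(source_b[q])]
    return 1 - len(disagreements) / len(shared), disagreements


# Hypothetical per-query deltas from click analytics and from expert grading.
click_deltas = {"rambo": 0.10, "rocky": -0.05, "bambi": 0.02}
expert_deltas = {"rambo": 0.20, "rocky": 0.15, "bambi": 0.01, "alien": 0.30}

rate, conflicts = direction_agreement(click_deltas, expert_deltas)
print(f"agreement: {rate:.0%}, investigate: {conflicts}")
```

The queries in the disagreement list are the ones worth a closer look, since they are where at least one source is telling you something misleading.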

Never trust, always verify!

Can you evaluate the AI ‘solution’ as a hypothesis?

If I told you your machine learning solution/project/product/idea was going to fail, which position would you rather be in?

- A 6 month, $5,000,000 project was run. The effort had a negative impact on conversions and product sales, and was deemed to be a failure.

- A 3 week, $10,000 experiment was run. The failed ‘hypothesis’ was abandoned and the team turned to more promising directions for their experimentation.

Even better is the scenario:

- 30 minutes were spent trying out an interesting idea on someone’s laptop. The idea was unsuccessful, and everyone decided to go to lunch.

The world is rich with ideas that might work. None are guaranteed to work for you. Ideas might come from what everyone hears that Google is doing. They might come from some crazy academic paper. Or some consulting firm’s blog.

We never ever tell clients that we know how to solve their search relevance. What we have are educated hunches, based on our experience, that should be validated heavily. The real silver bullet is not machine learning, it’s removing barriers to experimentation. It’s to run dozens of experiments quickly – to ‘fail fast’ to find that one gem that’s actually worth investing in.

But “removing barriers to experimentation” is hard work. Most importantly hard organizational work. It’s hard to get bosses and stakeholders to “plan” against an experiment-driven approach to doing work. (More on how to do that in a future blog article). Needless to say it can take years and discipline. I empathize with the predicament many search people find themselves in.

Sadly, this predicament doesn’t change the reality that many orgs take on relevance as a ‘project’ and end up with lots of money spent on disappointing results.

What problem, exactly, are you solving? What’s the hypothesis?

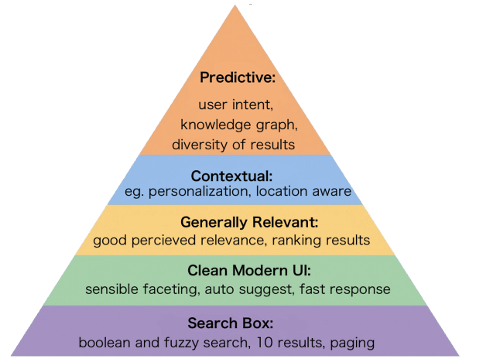

If you care about search, it seems like a good thing to want to progress higher and higher up the levels of maturity or, as my colleague Eric Pugh puts it, the search relevance “hierarchy of needs”.

Moving up the pyramid, you’ll find solutions of increasing complexity. Luckily many are working to end up at “generally relevant” – After moderate tuning, there’s modest improvement in the solution. In other words, basic usage of search engine features improves quality enough (assuming you can measure quality!).

It’s at the top two levels that almost all organizations stop, in my experience. Often for good reason – the last 10% under “contextual” and “predictive” can take years. Is it worth it? Sometimes yes, sometimes not.

The trick is, armed with diagnostic and measurement data, to find those areas where a cutting-edge technical solution makes sense for your domain. Crucially, just because a solution works on a problem (even a problem in your domain), it’s very, very likely NOT to apply exactly 1-1 to the work you’re doing.

In other words, you need to get good at using sound measurement to form hypotheses about where search is lacking. Is the key issue challenging domain-specific misspellings, for example? Is it, like in Snag’s use case, that users don’t use a lot of keywords and instead expect hybrid search & recommendations? Is it, like in Wikipedia’s use case, that users hitting search are almost always the weird exceptions where an exact title search doesn’t match?

The trick is you can’t boil the ocean. You have to move up this pyramid strategically. Use insights into how your users behave to find those areas that are worthwhile for investment.

Again we’re back to knowing, really really knowing, and double, triple, quadruple checking that you have the data to understand how users are searching. You should ask a lot of questions anytime you see a graph that just shows “we tried X and search got better”. How did they measure that? What was their method? Was it confirmed by other approaches?

How confident are you in the training data you need to solve that problem?

Training data are the examples used to show your model the ‘right’ answer.

Consider texting. If you were building machine learning to predict the word being typed in a text message, you would first need many (and I mean a lot of) text messages. You’d want original, poorly formatted text messages. You’d also want corresponding completions or corrections.

Obtaining all of this itself comes with many interesting decisions. Do you make assumptions about how people text, and use that to construct the training data? Or do you try to be more “pure”, perhaps looking at how users complete or correct strings in texts? For example, if you see “serach” corrected to “search” by a lot of users, is that a hint that it’s a correction, and thus that it should be flagged as one?
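As a rough illustration of the “pure” option, the sketch below mines candidate correction pairs from a log of what users typed versus what they ended up sending. The log format, the function name, and the `min_users` threshold are assumptions made up for this example. Each of them is exactly the kind of decision described above, and a different choice gives you different training data.

```python
from collections import Counter


def mine_corrections(events, min_users=2):
    """Turn (user_id, typed, final) events into candidate training pairs.

    A (typed, final) pair is kept only if at least `min_users` distinct
    users made the same change, to filter out one-off edits.
    """
    seen = set()
    counts = Counter()
    for user_id, typed, final in events:
        typed, final = typed.strip().lower(), final.strip().lower()
        if typed == final:
            continue  # nothing was corrected
        key = (user_id, typed, final)
        if key in seen:
            continue  # count each user once per pair
        seen.add(key)
        counts[(typed, final)] += 1
    return {pair: n for pair, n in counts.items() if n >= min_users}


# Hypothetical log of typed strings and what the user finally sent.
events = [
    ("u1", "serach", "search"),
    ("u2", "Serach", "search"),
    ("u1", "serach", "search"),  # same user again, not counted twice
    ("u3", "teh", "the"),        # only one user, below the threshold
    ("u4", "search", "search"),  # unchanged, ignored
]

print(mine_corrections(events))
```

Note what this quietly assumes: that an edit is a correction rather than a change of mind, that case does not matter, and that two users are enough evidence. None of those is obviously right.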

Getting these decisions right is far, far more important to a successful machine learning project than picking the algorithm to use. And training data really is just another kind of test data. Again, to hammer home the measurement and testing theme, this is something to obsess over – garbage in, garbage out. Many orgs spend a lot of time on machine learning, only to learn too late that the training data is poor or that they can’t obtain the training data they need.

How’s your org’s AI literacy?

In conclusion, it’s easy to become awed by the results of some machine learning demo. I know, I’ve been awed myself! But many of us who have been through it have a secret to tell you: it’s just math. It’s not robots coming to take over the world. Even better – often it’s shockingly simple math that’s effective, not some obscure deep learning model you’ll find in the latest paper.

That’s good news – once you do learn this stuff, there’s plenty of room for innovation. Just check out my Activate talk. Learning how the math works takes a lot of the intimidation out of machine learning. Just see the range of Haystack talks where some kind of ‘machine learning’ is used – but the term itself is barely mentioned. Instead the approach is just treated as another approach, not intrinsically better because it is associated with machine learning.

The hard work hidden in these questions is organizational discipline about measurement, feedback, testing, and experimentation. That’s the work that takes years – and it transcends technology.

Our mission is to empower organizations to do this. If you’d like help, either through training or consulting, get in touch!