“If you can not measure it, you can not improve it.”

Lord Kelvin (attributed)

Just as Continuous Integration and Continuous Deployment (CI/CD) enable a testing regime that minimizes the introduction of bugs into production, Continuous Experimentation (CE) provides a protocol for measuring search performance with respect to user satisfaction and business goals. Adhering to this process enables both monitoring for performance drift over time, and evaluating the impact of proposed changes prior to deploying them in an A/B testing context.

The Problem

- Search is hard. Improving the performance of your search system is hard. Even a simple collection, say one with five fields, has a large number of alternative search configurations. Just choosing 1, 2, 3, 4, or 5 fields involves 31 possible combinations. Now add a further task of tuning boost values on those 31 field variations and we produce a pool of possible experiments that is effectively infinite. This is a complexity that needs to be managed.

- Relevance tuning is hard. For each anecdotal problem, there is likely a solution that resolves the specific anecdote while damaging performance on other queries. Contrary to the wisdom of the internet, the plural of anecdote actually is data. Given enough anecdotes, the broader classes of relevance problems can be identified. This is a second complexity that needs to be managed.

- Experimentation is hard. There are numerous things that have to be decided for any experiment. What is the hypothesis? What will the hypothesis be tested against? Which collection(s)? How will performance be measured? Are the metrics from on explicit or implicit judgments? How much will it cost? How long will it take? Given a potentially infinite set of experiments, how can they be prioritized? This is a third complexity to be managed.

Continuous Experimentation (CE) is a protocol designed to seamlessly incorporate experimentation and evaluation into existing CI/CD infrastructure, combined with a prioritization model for ordering the experimental queue.

The Solution

Generations of software engineers have learned to manage complexity through abstraction. They have learned to manage complexity through estimation models. They have learned to manage complexity through the use of automated testing, at both the unit and integration levels. The hallmark of the great software engineer is a unique form of laziness: never do a process by hand that can be automated, and let the computer do the heavy lifting.

In order to manage these complexities, we employ a tripod, the model of stability and support. Each of the legs addresses one of the complexities. We manage tuning complexity and experiment options through abstraction on the queries and possible solutions. We manage the prioritization of candidate experiments via a simple mathematical model. The final leg is automation, enabling end to end evaluation of recurring and one-off experiments.

The Process

Relevance Triage

Relevance problems are generally identified anecdotally. “I ran this search, and it stinks…” is a familiar phrase to every relevance engineer. The goal of relevance triage is to take those disparate anecdotes and collect them into meaningful classes of relevance problems that can be prioritized. The table below provides a reasonable template for collecting together anecdotes, with some examples. It contains numerous possible examples, but is in no way an exhaustive list. An additional column could be added for notes.

Filling in this template is a tedious process. As anecdotes are collected, you look for where to put each new one, taking advantage of the human ability to recognize patterns. This is a bit of a black art, but does lend itself to some automated analyses. Knowing if your anecdotal query is a head, shoulder, or tail query is helpful, and knowing the user persona goals can also help collect similar anecdotes together.

It is important to realize that triage into Relevance Problem Classes will reduce the number of moles to whack, but won’t prevent Whac-A-Mole development. To make continuous performance improvements requires a more structured approach, employing empirical methods to evaluate each possible solution.

| Name | Description | Exemplars | Possible solutions |

| Data missing | A field, or field content is missing from one or more data items | Improve the upstream data curation process. Change the data on a case by case basis | |

| Data issues | The data is incorrect | ||

| Overly restrictive matching | A multi-term query fails due to extra, possibly non-content bearing terms | ||

| Overly restrictive phrase matching | Queries fail to match when missing some terms or presented out of order | ||

| Stemming issues | Sometimes Porter can be too aggressive as a stemmer. Sometimes stems don’t match when they should | ||

| Synonym issues | Sometimes we need a synonym, sometimes we don’t | ||

| Ranking vs relevance issues | Most relevant content vs freshest content |

Experiment Triage

RICE

Reach Impact Confidence Effort is a model for prioritizing business activities, using a simple formula:

RICE = \dfrac{RIC}{E}The dimensions are:

- Reach – How many users will this have an effect on?

- Impact – How much will this improve the experience for a single user?

- Confidence – How sure are you of success?

- Effort – How long will it take, estimated in person months.

To perform experiment triage, we use a modified version of the model, Win, Impact, Learning, Effort (WILE):

WILE = \dfrac{WIL}{E}- Win – The probability of success, analogous to confidence

- Impact – The same as in RICE

- Learning – What will we know on completion of the experiment?

- Effort – The same as in RICE

In general, experimentation is a tactical activity, and as such, the strategic value, in terms of reach, is a less important factor. The ratio does provide a reasonable metric for prioritization.

Collecting together each experiment with its configuration and its WILE score allows straightforward prioritization. One possible form is the spreadsheet based tabular format, below.

Experimental Template

Download a template from Continuous-Experiment-Template.xlsx

Each of the columns covers specifics of the experiment to be used in estimating the WILE score.

- Experiment – The name of the experiment and a link to its issue, e.g. a Jira ticket or GitHub issue.

- Problem Class – The problem class the experiment will operate on, as defined in Relevance Triage above.

- Severity/Frequency/Impact – A qualitative assessment of the problem: is it a pet query, a head query, a tail query.

- Vertical or product class – If search is used in multiple products or verticals, which is being evaluated.

- Offline metric – What score, such as nDCG@10, ERR, etc, or KPI will be used to evaluate the performance of the experiment.

- Data Set with Ratings – What test data will be used.

- Ready for Execution – Can the experiment be deployed immediately? Are there constraints that need to be met first?

- Hypothesis – What do we hope to learn, what did we learn.

- WILE scoring columns and computed score for the sorting

- Insights

- Completion Date

Experiment Automation

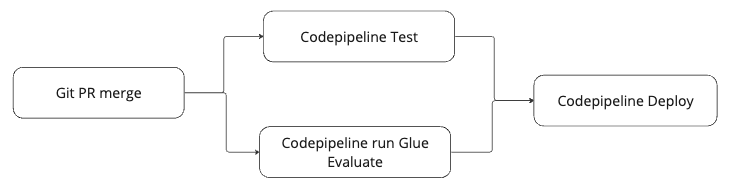

To bring all the pieces together, we incorporate experimental evaluation into our CI/CD pipeline. For each deploy, at a minimum, the baseline query set and relevance judgments are tested against the default metric, such as nDCG@10. This serves as another canary in the mine, alongside the functional and end to end tests. That same experimental pipeline component should have configurable instances where the specifics of the experiment to run, including query set, evaluation measures, etc are instantiated.

Using the prioritization by WILE score, a queue of experiments is created. Alongside the CI/CD driven experimental runs, each experiment is processed in order. Scheduling of such jobs can be performed to control resource consumption. The offline experimentation lab becomes an ever turning wheel, churning out performance results. From there, each new candidate improvement is measured.

The general form of the pipeline, viewed as an Amazon code pipeline is straightforward. On any update to the indexes or the search API, a number of specific jobs are run to perform the evaluation. The pipeline can also be configured to run on demand, or on a schedule, providing continuous monitoring of performance:

The evaluation framework consists of the following parameters:

- One or more query templates (verticals) to test

- DEV, STG, PRD designation

- Query collection to use, includes:

- query file

- query ID map

- relevance judgements

- expected results

- desired metrics:

- ERR

- nDCG

- MAP

- P

- Average Age

Expected results are compared to actual results. Differences greater than some epsilon are flagged, just like a failing test, for review.

The evaluation framework consists of the following parameters:

- One or more query templates (verticals) to run

- DEV, STG, PRD designation

- Query collection to use, includes:

- query file

- query ID map

- relevance judgements

- expected results

- desired metrics:

- ERR

- nDCG

- MAP

- P

- Average Age

Nightly automated pull appends results to a dataframe (spreadsheet) with a new column for the date. Facilitates tracking performance over time. If actual results are different from expected, the nightly pull acts as a canary to flag the day for review.

Conclusion

“If you can’t explain it simply, you don’t understand it well enough”

Albert Einstein

The corollary to this: once you do understand something well enough, the explanation becomes simple. We have known for centuries the value of controlled experimentation in advancing our understanding of the universe. As software engineers, we know the value of testing and continuous integration and deployment. None of these notions are new.

It clearly follows that all that we know can easily be applied to the problem of search result quality evaluation, leading to a process of continuous experimentation. Taking the reins of the experimentation carriage out of the hands of individual relevance engineers and connecting them to an autopilot reduces the burden on those engineers. This allows them more time to do what they do best, apply the results of experimentation to the continuous improvement of search.

If you need help creating systems and processes for continuous improvement of search result quality, get in touch.

Image from Experiment Vectors by Vecteezy