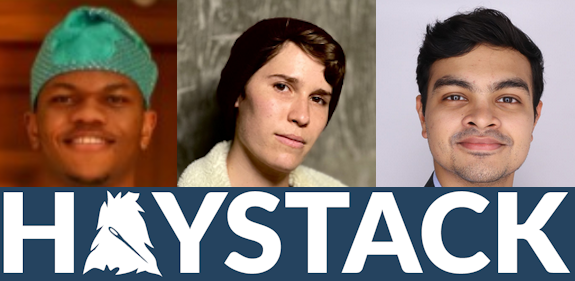

A guest blog by Habeeb Shopeju, Maya Ziv and Ujjwal Maheshwari

The 2024 edition of Haystack US, the Search Relevance Conference, took place in Charlottesville, Virginia during the week of April 22nd, 2024, and it was an immense success. The event had 20 talks across two days, with speakers and attendees from across the globe. Haystack had 309 attendees: 122 in person and 187 virtually. Three of the attendees were recipients of the Hughes Scholarship, organised in memory of Simon Hughes, who was a regular attendee and speaker at Haystack. The winners were Habeeb Shopeju, Maya Ziv and Ujjwal Maheshwari. Aside from attending the conference, the recipients also received free registration for one of OSC’s “Think Like a Relevance Engineer” or “Hello LTR” online training courses and $1000 in Vectara credits. Our thanks go to OpenSource Connections and Vectara for sponsoring the awards.

In this article, the recipients share their experience of Haystack 2024, their favourite talks and the new things they learnt. They also take a stab at predicting the main topics of the next edition. Without further ado, let’s hear from Habeeb, Maya and Ujjwal.

What one new thing did I learn at the event?

Habeeb

Something new I learnt at the event was the use of AI relevance judgements in production. I already knew that one can prompt large language models to make relevance judgements for search applications. However, the talk “Chat With Your Data – A Practical Guide to Production RAG Applications” by Jeff Capobianco was the first time I saw the technique applied in production, as more than just a clever idea.

The idea Jeff discussed was to check whether the large language model and the domain experts agree in their relevance judgements. You do this by collecting relevance judgements for the same query-document pairs from both the model and the experts. You then calculate Cohen’s kappa, a statistic that measures agreement between two raters while correcting for the agreement expected by chance.

Sufficient agreement means that you can use the large language model for relevance judgements in the rest of your search project, provided the domain remains the same. Since this is an experiment, agreement is not guaranteed. If it does hold, however, there is potential for cost and time savings.
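To make the agreement check described above concrete, here is a minimal sketch of Cohen’s kappa in plain Python. It assumes binary judgements (1 = relevant, 0 = not relevant) collected from an expert and an LLM for the same items in the same order. The labels below are made-up illustration data, not figures from the talk. The function is a from-scratch illustration; scikit-learn’s `sklearn.metrics.cohen_kappa_score` computes the same statistic.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two raters who labelled the same items in the same order."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty list of items")
    n = len(rater_a)
    # Observed agreement: share of items where both raters gave the same label.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: probability both pick the same label if each labelled
    # independently according to their own label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if p_e == 1.0:
        # Both raters used one identical label for every item; kappa is undefined,
        # so treat it as perfect agreement by convention.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Hypothetical binary judgements for ten query-document pairs.
expert = [1, 1, 0, 0, 1, 0, 1, 1, 0, 1]
llm = [1, 1, 0, 1, 1, 0, 1, 0, 0, 1]
print(round(cohens_kappa(expert, llm), 3))  # 0.583
```

Here the raters agree on 8 of 10 items (observed agreement 0.8), but their label frequencies alone would produce 0.52 agreement by chance, so kappa is roughly 0.58. What counts as “enough” agreement to trust the model is a judgement call for each team; no threshold is implied here.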

Maya

I learned so much at this year’s Haystack – what an incredibly information-dense and fascinating series of talks! Honestly, the nugget of wisdom that stuck with me the most is that it’s okay to cheat. Simple, more manual strategies like pinning results for popular queries, mapping out synonyms, and building lists of special search terms (like brands) can protect you from over-indexing on your favorite or most visible pet searches, to the detriment of the long tail of queries your users make. My team is really small and has to move quickly, so we tend to go straight to new technology to solve problems in search. The insight that a little manual work can go a long way toward making search feel responsive and intuitive really landed for me.
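As an illustration of the kind of manual rules mentioned above, here is a small engine-agnostic sketch of query-time pinning, synonym expansion and brand detection. The rule tables, document IDs and function names are all hypothetical. Most search engines offer built-in features for these tasks, and this is not how any particular engine implements them.

```python
from typing import Dict, List, Set

# Hypothetical hand-curated rules; in a real system these would live in config.
PINNED: Dict[str, List[str]] = {"gift card": ["doc-gift-card-landing"]}
SYNONYMS: Dict[str, Set[str]] = {"sofa": {"couch"}, "couch": {"sofa"}}
BRANDS: Set[str] = {"acme"}


def rewrite_query(query: str) -> Dict[str, List[str]]:
    """Apply manual rules: pinned results, synonym expansion and brand detection."""
    terms = query.lower().split()
    expanded: List[str] = []
    for term in terms:
        expanded.append(term)
        expanded.extend(sorted(SYNONYMS.get(term, set())))
    return {
        "pinned": PINNED.get(" ".join(terms), []),
        "terms": expanded,
        "brands": [t for t in terms if t in BRANDS],
    }


def apply_pins(pinned: List[str], ranked: List[str]) -> List[str]:
    """Put pinned documents first, then the engine's ranking minus any duplicates."""
    return pinned + [doc for doc in ranked if doc not in pinned]


print(rewrite_query("Acme Sofa"))
# {'pinned': [], 'terms': ['acme', 'sofa', 'couch'], 'brands': ['acme']}
print(apply_pins(rewrite_query("Gift Card")["pinned"], ["doc-42", "doc-gift-card-landing"]))
# ['doc-gift-card-landing', 'doc-42']
```

The appeal is that each rule is a one-line data change rather than a model retrain, which suits a small team that needs to move quickly.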

Ujjwal

One new thing I learned at the event was not to underestimate LLMs. This insight came from Ali Rokni’s talk. Generally, when considering the use of LLMs, most industry professionals think about building a RAG (retrieval-augmented generation) system. However, Ali and his team at Yelp chose a different approach: using LLMs for query understanding. This was a revelation to many of us in attendance, as few had considered using LLMs as a step before searching rather than after it, as is typical in a RAG system. Moreover, they generated and cached results for head queries offline using powerful models like GPT-4, while using smaller models like BERT for tail queries in real time. That was an ingenious idea. This talk really opened up a world of possibilities for what could be achieved with LLMs!
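The head/tail split described above can be sketched as a simple cache-with-fallback pattern. This is a minimal illustration under assumptions, not Yelp’s implementation. The output schema, the function names and the two “model” functions are hypothetical stand-ins for a large LLM run offline and a small model served online.

```python
from collections import Counter
from typing import Callable, Dict, List

# Hypothetical output schema for "query understanding" (e.g. detected intent, location).
Understanding = Dict[str, str]
Model = Callable[[str], Understanding]


def normalize(query: str) -> str:
    """Normalize queries so that cache keys match regardless of case and spacing."""
    return " ".join(query.lower().split())


def build_head_cache(query_log: List[str], head_size: int, large_model: Model) -> Dict[str, Understanding]:
    """Offline step: run the expensive model only over the most frequent (head) queries."""
    counts = Counter(normalize(q) for q in query_log)
    return {q: large_model(q) for q, _ in counts.most_common(head_size)}


def understand_query(query: str, head_cache: Dict[str, Understanding], small_model: Model) -> Understanding:
    """Online step: serve head queries from the cache, fall back to the small model for the tail."""
    key = normalize(query)
    if key in head_cache:
        return head_cache[key]
    return small_model(key)


# Stand-ins for the real models (hypothetical): a production system would call an
# LLM offline and a small fine-tuned model online.
def fake_large_model(q: str) -> Understanding:
    return {"query": q, "source": "large-model-offline"}


def fake_small_model(q: str) -> Understanding:
    return {"query": q, "source": "small-model-online"}


log = ["pizza near me", "Pizza near me", "sushi", "pizza  near me", "vegan ramen open late"]
cache = build_head_cache(log, head_size=1, large_model=fake_large_model)
print(understand_query("PIZZA near me", cache, fake_small_model)["source"])  # large-model-offline
print(understand_query("vegan ramen open late", cache, fake_small_model)["source"])  # small-model-online
```

The design trade-off is that the expensive model’s latency and cost are paid once per head query offline. Live traffic only ever waits on a cache lookup or the small model.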

Which was my favourite talk?

Habeeb

My favourite talk from the event was “Exploring dense vector search at scale” by Tom Burgmans & Mohit Sidana. The presenters investigated how various components of Solr affect query latency. It was information-dense, but I learnt a lot from it and genuinely appreciate the work put into trying out each question and observing how Solr responds. There are a couple of ideas from that talk that I plan to look into further using OpenSearch. One is using byte instead of float32 for vector embeddings; another is the relationship between the number of filters and query latency.
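To show why byte vectors are attractive, here is a minimal sketch of symmetric scalar quantization from float32 to int8 using only the standard library. This is one common way to produce byte vectors, not necessarily what the presenters or OpenSearch do. The `MAX_ABS` range and the toy vectors are made-up assumptions; check your engine’s documentation for how byte vectors are expected to be ingested.

```python
from array import array
from typing import Sequence


def quantize_to_int8(vector: Sequence[float], max_abs: float) -> array:
    """Symmetric scalar quantization: clip to [-max_abs, max_abs], then map onto [-127, 127]."""
    scale = 127.0 / max_abs
    clipped = (min(max(x, -max_abs), max_abs) for x in vector)
    return array("b", (round(x * scale) for x in clipped))


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


# Toy float32 embeddings (hypothetical values).
doc = array("f", [0.12, -0.40, 0.33, 0.05])
query = array("f", [0.10, -0.35, 0.30, 0.00])
MAX_ABS = 0.5  # hypothetical range; in practice estimate it from a sample of your embeddings

q_doc = quantize_to_int8(doc, MAX_ABS)
q_query = quantize_to_int8(query, MAX_ABS)

# Storage per vector in bytes: float32 uses 4 bytes per dimension, int8 uses 1.
print(doc.itemsize * len(doc), q_doc.itemsize * len(q_doc))  # 16 4

# Rescale the integer dot product back to the float range to compare scores.
approx = dot(q_doc, q_query) * (MAX_ABS / 127.0) ** 2
print(dot(doc, query), approx)
```

The 4x memory saving comes at the cost of a small scoring error. How much that error affects ranking quality and latency in practice is exactly what would need measuring.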

Maya

There were too many excellent talks to choose from! I think my top 3 were “LTR: A Project Retro” from the Reddit team, “Personalizing search using multimodal latent behavioral embeddings”, and “Search query understanding with LLMs: from ideation to production”. All three were absolutely chock full of wisdom and practical experience, and I loved that they followed real, concrete use cases that felt easy for me to translate to my own search problem. Honorable mention goes to “Expanding RAG to incorporate multimodal capabilities” from the team at OpenSearch – watching that talk felt like peering two years into the future, and it was pretty crazy. Brave live demo!

Ujjwal

My favorite talk at Haystack 2024 was “Learning to Rank” by Team Reddit. The presentation provided attendees with a glimpse of what it takes to produce an excellent search experience for users at Reddit’s scale. It was impressive to see that aspects such as backend engineering and infrastructure were given as much importance as the learning-to-rank model itself. This talk was a great example of how search relevance engineers rely heavily on collaboration with other engineering disciplines to achieve their goals.

What was my experience with the search community at the event?

Habeeb

While I could not take part in the event physically, I found the search community quite welcoming. I could interact with some interesting individuals via Zoom during the presentations and learn from the questions they asked. I even set up calls with some speakers and attendees to network after the event, despite not being physically present. These are signs that the community is genuinely open to sharing knowledge, helping others and growing.

Maya

What an incredibly kind and welcoming community! It’s always a bit of a gamble to go to a conference by yourself (especially one across the country, as I’m based in the Bay Area!) but I felt so completely at home with the people of Haystack. Everyone was so friendly and so excited to talk about what I was working on – getting to compare notes with other search practitioners working on similar search problems to mine was a profoundly valuable experience. I found myself often pulling out my phone to take notes as we talked! The density of expertise at Haystack was such an amazing resource, and I’m so grateful that everyone was so willing and excited to share their experience and imagine what’s possible.

Ujjwal

For me, the highlight of Haystack was meeting the search community. As a newcomer to the industry, I found the community members very welcoming. Each conversation was enlightening, teaching me something new or offering a fresh perspective on something I already knew. I’ve told my friends that the amount of knowledge gained per minute of conversation at this conference was incredibly high! This experience made attending the event especially valuable for me.

What will be some of the main topics at the next edition of Haystack?

Habeeb

I think that next year more of the conversations will tend towards the efficient use of language models in search. My guess is that small language models will gradually become a hot topic as language models are used for more domain-specific search use cases. This year’s edition had lots of RAG-themed talks, an indication that using language models in search applications works. However, the latency and cost of using language models remain a problem, so I expect some attention to go in this direction.

Ujjwal

This year, many talks focused on proof-of-concept variations of RAG. However, very few of these techniques have been deployed in production or have contributed to revenue generation or enhanced customer engagement. I believe that next year’s Haystack conference will feature many talks on multimodal RAG concepts that have been successfully implemented in production, along with the lessons learned from these deployments. Additionally, I expect to see some innovative ideas related to relevance and retrieval specifically focused on RAG.

Maya

I definitely see more LLM content on the horizon, especially techniques for avoiding hallucination, caching techniques to make LLMs fast enough to be useful in a production context, and comparisons between major providers and open-source models. That one’s pretty obvious, but my bolder prediction is that we’re going to see talks discussing litigation involving the major search engines (the Google monopoly case has been fascinating) and, more generally, some reflection and retrospection on web search.

Conclusion

There you have it: the thoughts of the recipients of the Hughes Scholarship for the Search Relevance Conference 2024. You can join the search community through Relevance Slack to learn about various search technologies and network with the amazing people of the search community. Videos and slides from all of this year’s talks will be published soon on the OpenSource Connections YouTube channel. Haystack US will return in 2025, and the dates for the European edition will be announced soon. Join OpenSource Connections’ event mailing list for updates.

Haystack US 2024 was brought to you by OpenSource Connections, Experts in Search & AI, offering consulting, training and specialist staffing – talk to us about your search & AI project.