Does anyone else remember the Segway?

Segway was billed as the most revolutionary transportation innovation, well, ever. We would use it instead of cars; instead of biking and walking. To quote a Wired article on Segway:

Before it launched, the Segway was said to revolutionize the way cities are laid out and how people get around them. Venture capitalist John Doerr predicted it would reach $1 billion in sales faster than any company in history, and that it could be bigger than the Internet.

Suffice it to say, that didn’t happen. Aside from mall cops and tourists, there wasn’t much of a market for the Segway. The market, for whatever reason, said “no thanks!”

What does this have to do with search?

Search teams have lots of “Segways” happening all the time: supposed innovations, ideas, new products, etc., that will improve search for that company. Yet after all is said and done, after it’s deployed, after budget is invested, the promised returns don’t materialize. Why?

Feedback Debt

What is the reason behind these expensive failures? It’s something I refer to as feedback debt.

Feedback debt is investment in functionality without good evidence to support expected business outcomes. If you deeply invest in an idea without acquiring early, high-quality, objective evidence that demonstrates that idea’s expected outcomes, you risk a wasted investment. It sounds self-evident, but very often we end up in a sunk cost fallacy: simply because we invested in it, we must deepen our investment. Never mind the lack of feedback substantiating the promised outcomes.

But we often don’t see that we have a lack of evidence. We can be blinded by our own hype. It’s much harder to question our assumptions with humility and paranoia. Frankly, it’s yet harder to develop an organizational culture that accepts experimentation, and the associated failures/negative results, as par for the course.

Yet even the best-meaning, most humble search teams can find themselves accruing high amounts of feedback debt. And I think it has something to do with how we develop search products. Typical development processes emphasize feature correctness and don’t think about usefulness. Correctness means we reliably built the product to some specification. All the tests pass. It’s fast. It won’t crash. The Segway indeed balances and rolls around. It does what was promised.

Usefulness, on the other hand, gets at whether the Segway will be valued by customers. When we measure usefulness, we can assert, confidently and with evidence, that the specified functionality helps. Measuring correctness helps us overcome technical debt. Measuring usefulness helps us overcome feedback debt.

Feedback Rich vs Feedback Blind Products

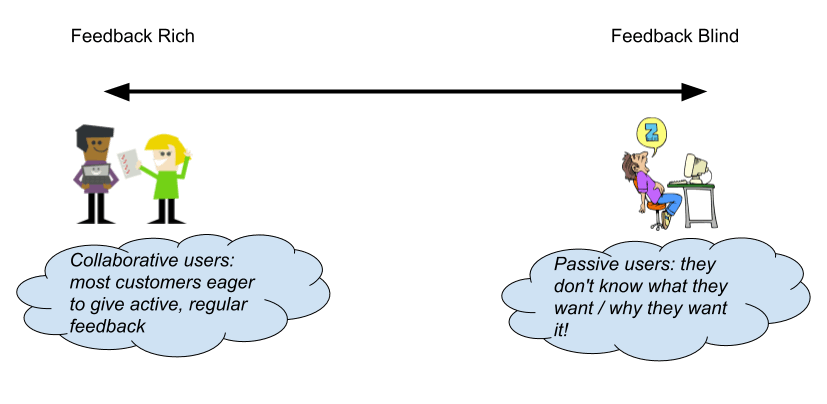

When I started my career at a government contractor, our product had very engaged customers. These customers helped fill our product backlog with ideas, bugs, and requested improvements. To them, the software was mission critical. It had to work fast, well, and reliably. These kinds of active customers want to participate by telling you what they need from the product.

I call these feedback rich environments: we have a strong feedback signal from our vocal customer base. We can focus on building reliable, well-tested software that meets their needs. We have little feedback debt given our open lines of communication between ourselves and the customer.

However, most consumer-facing search applications are not mission critical to users. There are very few open lines of communication. We rarely just have a dozen users eager to collaborate with our organization. Instead, we have millions of passive users who can barely give us the time of day. Even if you could gather vocal users for feedback, they wouldn’t be statistically representative.

These scenarios are feedback blind environments. Here, we are faced with a stark lack of feedback. We truly do fly blind. We have no idea whether what we build is useful. Yet we build software in the same way, with the same practices, as in my feedback rich, government contractor example. We code to someone’s specification, not to customer usefulness.

Learning to fly on instruments

When feedback blind, hope is not lost. Search teams can and do succeed! But their development posture must be quite different from the feedback rich. Successful teams focus heavily on instrumentation, not just features. We build instrumentation to avoid, as early as possible, Segway-like failures. Instead, we can have little ‘stub your toe’ sized failures. Little annoyances that point at a bad idea early, before anyone wrote a single unit test, performed an extensive code review, or invested heavily in scalability.

What kind of instrumentation might a feedback blind organization build? To name just a few (each of these deserves its own article!):

- A/B Testing: Search A/B testing is an art in and of itself. One technique, interleaving, can help by weaving together the results of two ranking algorithms into a single search results page to determine which strategy better serves your KPIs.

- Click Models that analyze how users interact with the search results page, studying how click behaviors indicate relevance for search results

- Measuring diversity of search results — where our search strategy is not just about relevance, but how our results cover a broad range of reasonable answers to a user’s perhaps ambiguous query

- Measuring intent detection: after we make some assumptions about the query intent, how often does the user correct the search system’s interpretation? How successful is that interpretation? For example, did we prefilter to the right category? Did we understand that ‘screwdriver’ was a tool, not a cocktail?

- Trying to understand user retention through your search system’s behavior, not just the search’s effectiveness in driving conversions. You want customers that come back!
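To make the interleaving idea from the first bullet a bit more concrete, here is a minimal sketch of one common variant, team-draft interleaving. Treat it as an illustration under assumptions, not a production design: the function names, the document IDs, and the choice to credit each click to whichever ranker contributed the clicked result are all made up for this example.

```python
import random
from typing import Dict, List, Tuple


def team_draft_interleave(
    ranking_a: List[str], ranking_b: List[str], k: int, rng: random.Random
) -> Tuple[List[str], Dict[str, str]]:
    """Weave two rankings into one list of up to k results.

    Returns the interleaved list and a map of doc -> "A" or "B",
    recording which ranker contributed each result.
    """
    rankings = {"A": ranking_a, "B": ranking_b}
    picks = {"A": 0, "B": 0}
    shown: List[str] = []
    team_of: Dict[str, str] = {}
    while len(shown) < k:
        # The ranker with fewer picks goes next; ties are broken by coin flip.
        if picks["A"] != picks["B"]:
            team = min(picks, key=picks.get)
        else:
            team = rng.choice(["A", "B"])
        # Try the chosen ranker first, then fall back to the other one.
        for candidate_team in (team, "B" if team == "A" else "A"):
            doc = next(
                (d for d in rankings[candidate_team] if d not in team_of), None
            )
            if doc is not None:
                shown.append(doc)
                team_of[doc] = candidate_team
                picks[candidate_team] += 1
                break
        else:
            break  # both rankings are exhausted
    return shown, team_of


def credit_clicks(clicked: List[str], team_of: Dict[str, str]) -> Dict[str, int]:
    """Count clicks per ranker; clicks on unknown docs are ignored."""
    wins = {"A": 0, "B": 0}
    for doc in clicked:
        if doc in team_of:
            wins[team_of[doc]] += 1
    return wins


if __name__ == "__main__":
    page, teams = team_draft_interleave(
        ["d1", "d2", "d3"], ["d3", "d4", "d1"], k=4, rng=random.Random(0)
    )
    print(page, credit_clicks(["d4"], teams))
```

Summed over many sessions, the per-ranker click counts give a rough preference signal between the two strategies. How you turn that signal into a decision (and which KPI it stands in for) is exactly the kind of domain-specific care these strategies need.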

Many of these strategies require a tremendous amount of care. They depend on the domain and the level of available instrumentation. Finding the right instrumentation strategy to overcome feedback debt can be challenging. Every problem has subtle differences. Getting started from nothing is a careful art.

Test Driven Relevancy

We don’t want to scale up correctness if we know an idea is going to fail. Then we just end up building a Segway: many resources wasted on an ultimate failure. We wouldn’t want to do the opposite either: we don’t want to make something theoretically useful that doesn’t actually work correctly. So what we need to do is scale up usefulness and correctness together, carefully calibrating our investment.

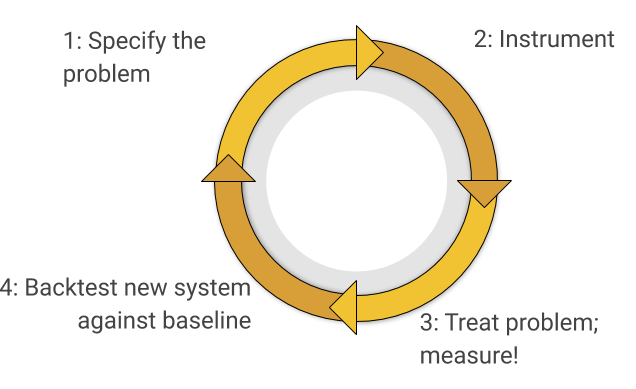

If we assume we have a broad issue, like “intent detection” or “question answering accuracy”, then in all of these cases we would follow pretty much the same iterative workflow, shown in the figure below:

1. Design a sound, cost-effective strategy for gathering feedback for this component of the search application

2. Build and/or gather instrumentation to evaluate that component of the application

3. Treat the issue with a proposed fix

4. Backtest this part of the search application given past instrumentation (GOTO 1, improve maturity, accuracy and rate of experimentation)

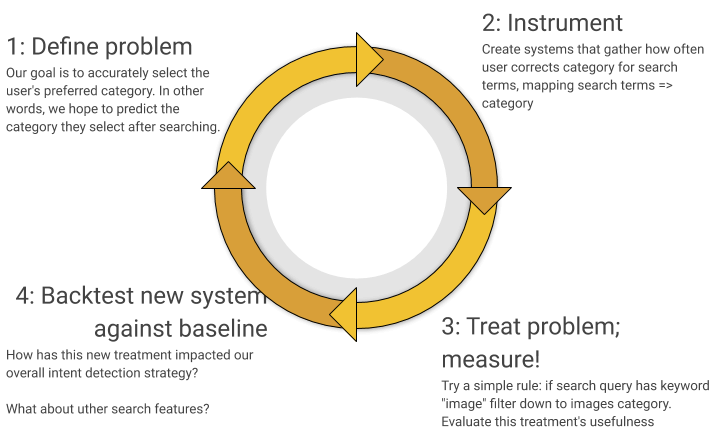

Here’s an example: Let’s say your goal was to improve “intent detection”. Intent detection here means accurately detecting the user’s category (such as how Google automatically switches to Google Images if it expects that’s your intent). Then we might set up a process like the following:

Most search teams, in my experience, just do step 3. They don’t think deeply about steps 1, 2, and 4, which help to get at whether the feature would be useful to users. They begin building the Cadillac version of their feature instead of throwing something together with spare parts to see how it rides for their customers.
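As a rough idea of what a “spare parts” version of steps 1, 2, and 4 could look like for intent detection, here is a hypothetical sketch. It assumes your logs record each query along with the category the user ultimately settled on, and it treats that final category as a crude proxy for true intent. The field names `query` and `final_category`, both toy classifiers, and the four log rows are invented for illustration; they are not real data or a recommended methodology.

```python
from typing import Callable, Dict, List

# Hypothetical log row: {"query": ..., "final_category": ...}
LoggedSearch = Dict[str, str]


def backtest_intent(
    classifier: Callable[[str], str], logs: List[LoggedSearch]
) -> float:
    """Fraction of logged searches where the predicted category matches
    the category the user ended up in. Returns 0.0 for empty logs."""
    if not logs:
        return 0.0
    hits = sum(
        1 for row in logs if classifier(row["query"]) == row["final_category"]
    )
    return hits / len(logs)


def baseline(query: str) -> str:
    # Toy "current behavior": everything is treated as a tool.
    return "tools"


def candidate(query: str) -> str:
    # Toy proposed fix (step 3): a couple of keyword hints for drinks.
    if "recipe" in query or "cocktail" in query:
        return "drinks"
    return "tools"


# Made-up past instrumentation, standing in for real logs.
logs: List[LoggedSearch] = [
    {"query": "screwdriver set", "final_category": "tools"},
    {"query": "screwdriver cocktail recipe", "final_category": "drinks"},
    {"query": "phillips screwdriver", "final_category": "tools"},
    {"query": "screwdriver recipe", "final_category": "drinks"},
]

if __name__ == "__main__":
    print("baseline:", backtest_intent(baseline, logs))    # 0.5 on this toy log
    print("candidate:", backtest_intent(candidate, logs))  # 1.0 on this toy log
```

Something this small can be thrown together before any serious engineering starts. If the candidate can’t beat the baseline even on past logs, that’s a ‘stub your toe’ sized failure rather than a Segway.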

If anyone does steps 1, 2, and 4, it might be a data science team. It’s often outsourced to them. But this, too, is often suboptimal for a number of reasons:

- The whole culture needs to be reoriented to think scientifically, with hypotheses that can be evaluated. This isn’t a special role data scientists should be playing.

- Data scientists’ unique role ought to be first & foremost to improve the evaluation methodology, but they can’t do this without product expertise.

- Product people often have the deepest insight into the problem, and should guide solutions and approaches. They have to be tightly involved with this process or value is being missed.

- If any “active management” of the solution needs to occur (such as with a knowledge graph or other human-in-the-loop machine learning), product people are best suited with their domain expertise to be the human in the loop.

- Similarly, search relevance engineers can work with data scientists to give them feedback on the likelihood that a given idea can be implemented in a fast, performant way.

More than one instrumentation strategy

If you look at my naive methodology above, the million dollar question should be:

“How do you know if that’s a good way of measuring intent detection?”

Well, I really don’t! It seems like a place to get started, and likely better than nothing. But a humble and paranoid search team, eager for feedback, is always improving their methodology. A healthy pattern I’ve seen is siloing methods of measurement somewhat. You have a team that just does A/B testing, another that just does engagement-based relevance testing, and so forth. This removes the possibility of groupthink, and opens the door to a diverse range of measurement options.

Embracing Failure…

There is a silver bullet for search. A cultural shift towards experimentation that helps us iterate quickly on our ideas. But this shift isn’t something just for the data science team, it’s for the whole organization. Internalized at every level.

To make progress, we need to celebrate racking up failures! If our instrumentation is good, and we know some idea truly and honestly failed, then that’s a success! Negative scientific knowledge about our customers is knowledge too, and can help guide future experimentation.

It takes a healthy culture to eliminate feedback debt. Using data to objectively evaluate a high-status person’s ideas can be scary. Yet to get to an environment where search is solvable, we must embrace a very high level of organizational safety. We need to feel OK failing and experimenting.