As a contributor to AI Powered Search, I’m struck by how often organizational, rather than technical, challenges stymie machine learning adoption. Let’s explore one of those challenges: silos between the disciplines implementing search, which lead to breakdowns in communication, office politics, finger-pointing, and ultimately the dark side…

Good Search Teams Make Tradeoffs Early and Often

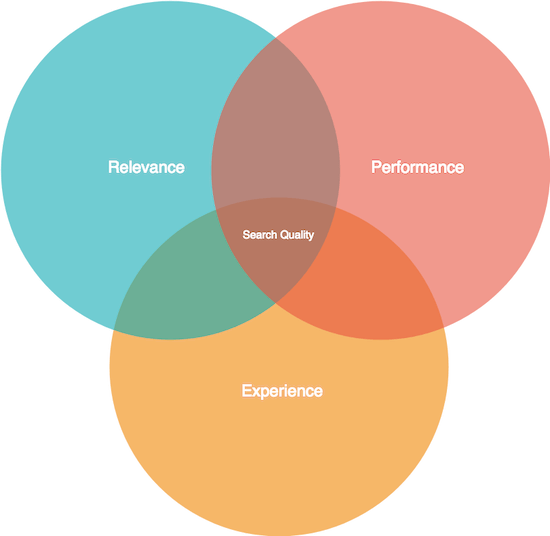

Max Irwin introduced the idea of Search Quality: a balance between three search quality factors: relevance, performance (& stability), and experience (the UI), as shown below:

These factors map roughly to different disciplines: performance/stability is owned by engineering, experience by UX/design, and relevance by data science.

Ideally, your team would optimize every factor. In reality, you have limited resources, so you must make hard tradeoffs. Further, it’s a game of whack-a-mole. A minor increase in search relevance might cause performance to plummet. A change in how documents are displayed might cause users to interact with them more, ultimately impacting relevance. Finding the right tradeoff between these factors (and budget!) early separates great search teams from the rest.
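To make the relevance vs performance tradeoff concrete, here is a minimal sketch of how a team might agree on a latency budget up front and then pick the most relevant option that fits it. Everything here is an assumption for illustration: the `Candidate` class, the `pick_candidate` helper, the candidate names, and all the numbers are hypothetical, not measurements from a real system.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    ndcg: float             # offline relevance score, higher is better (made-up values)
    p95_latency_ms: float   # 95th percentile query latency (made-up values)


def pick_candidate(candidates, latency_budget_ms):
    """Return the most relevant candidate that fits the latency budget, or None."""
    within_budget = [c for c in candidates if c.p95_latency_ms <= latency_budget_ms]
    if not within_budget:
        return None
    return max(within_budget, key=lambda c: c.ndcg)


# Hypothetical options a data science and engineering team might weigh together.
candidates = [
    Candidate("baseline text matching", ndcg=0.62, p95_latency_ms=40),
    Candidate("learned reranker on top 100", ndcg=0.68, p95_latency_ms=120),
    Candidate("learned reranker on top 1000", ndcg=0.69, p95_latency_ms=450),
]

choice = pick_candidate(candidates, latency_budget_ms=200)
print(choice.name)
```

The point is less the code than the conversation it forces: engineering has to state the budget, and data science has to report relevance alongside the cost of getting it.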

Engineering vs Data Science | Performance vs Relevance Tradeoffs

Do your engineers understand your data scientists’ requirements early enough (and vice versa)? Or do they only realize after months of siloed work that they built the wrong thing?

Sometimes a data science team jumps to bleeding-edge research without considering engineering realities. Engineers, however, often build a tank: fast, reliable, packing firepower, and impervious. Yet tanks are inflexible and hard to evolve. Perhaps they should build a bicycle first: still faster than walking, and easy to experiment with by swapping parts.

Famously, the winner of the Netflix Recommender Prize squeezed every bit of accuracy from the underlying data. Yet the complexity of the implementation made it too costly for Netflix engineers to put reliably into production. Did Netflix really then recoup its investment in the prize? Was the winning team focused too much on bleeding-edge solutions? Or was Netflix infrastructure too much of a “tank”? Optimized for what it did well, too inflexible to adopt the new approaches? How could early collaboration here have led to a better investment?

Similarly, there have been great results from transformer models in search. Yet the engineering behind deploying these solutions is not as simple or ubiquitous as boring old text matching. Only now is some of the engineering required coming to mainstream search engines. Implementing these capabilities today requires early, frequent data science and engineering collaboration to do well.

UX vs Data Science | Experience vs Relevance Tradeoffs

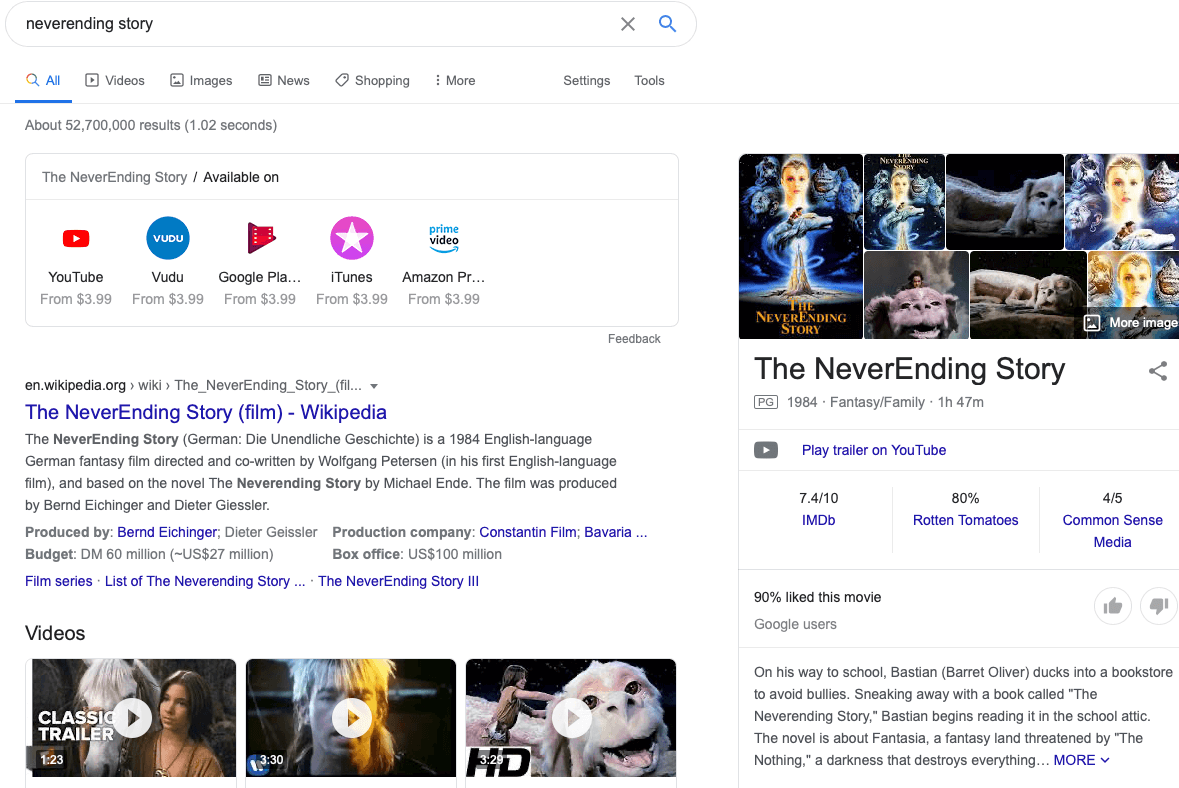

More sophisticated search UIs create more complex data science problems. A search page that once had ‘10 hot links’ is now increasingly made up of many little search UIs, each reflecting the users possible intent. Consider the Google search for “Neverending Story” below:

From this screenshot, Google has inferred several intentions

- Information on Neverending Story (right sidebar – Wikipedia article)

- Watching Neverending Story (upper left ‘Available On’)

- Watching clips from Neverending Story (list of ‘Videos’)

Search is increasingly many intent-targeted widgets woven together. I think it’s a good pattern to follow. Yet trying to recreate everything Google does won’t work without a Google-sized budget.

A siloed search UI team can build great wireframes, promising to implement all kinds of things like question-answering cards, automatic intent detection, and more. Only later, when the backend is implemented, does it become clear that nobody told the engineers or data scientists these were requirements.

At the same time, data scientists need to be flexible in their own thinking. Many Kaggle-like search tasks assume purely optimizing “10 hot links” or “get the right answer”. But ‘search on your product’ is not a Kaggle competition. It’s heavily dictated by how users interact with an evolving UI design in your specific domain, for your specific user base and product.

Without frequent collaboration, we can fall prey to this Charles Babbage quote:

On two occasions I have been asked, “Pray, Mr. Babbage, if you put into the machine wrong figures, will the right answers come out?” … I am not able rightly to apprehend the kind of confusion of ideas that could provoke such a question.

For the data scientist, the ‘wrong input’ is a misunderstanding of user behaviors on a custom, domain-specific app. For a UI designer, it’s not appreciating that the ‘inputs’ to their beautiful wireframes might be wrong. Only if they talk early might they get close to the right figures.

The Ideal Search Team Structure for AI Powered Search?

Quick, build your search dream team! Would you prefer:

1. Any three people on your team, organized however you want? OR

2. Nine people on your team, but subdivided into teams by discipline (an engineering team, a data science team, etc.), each accountable for the corresponding search factor (engineering for performance, data science for relevance, etc.)?

Well, org structures are not always straightforward (there are more than two options!).

Given my druthers, I would take (1): the team of three, one from each discipline. I would hold them accountable for search quality. I’d do this because they can usually make tradeoffs & decisions without me getting involved.

Alternatively, I would do (2) but cheat. Despite official team structures, individuals would become a single de facto agile team, organized around a single backlog. Each team member then represents the interests of their org in an effort that requires the input of every org involved. I see some organizations do this well, such as those where one discipline is a shared service across many internal customers.

A ‘pure’ scenario (2) can create disasters. If fully isolated, it can take months to realize each group’s solutions don’t satisfy the others’ requirements. Their solutions will collide like freight trains loaded with sunk costs. Teams will experience heated conflict, as each is unable to meet its individual goals without the others’ work. Yet no group will easily bend to the demands of others. These issues will create needless politics that cost a tremendous amount and deliver very little.

Where Silos are OK

Silos can be a feature, not a bug, when we need to eliminate groupthink.

In relevance, this can happen with search relevance evaluation. We might want to get independent reads on the quality of search. So it doesn’t hurt to create a team per methodology: human relevance, A/B testing, analytics-based relevance, etc. They might measure slightly different things, helping us learn more about where our solutions are successful or unsuccessful.
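As a rough sketch of why independent reads are useful, the snippet below compares a human-judgment metric with a click-based one for the same queries and flags where they disagree. All of it is assumed for illustration: the data, the thresholds, and the helper names (`precision_at_k`, `click_through_rate`, `find_disagreements`) are hypothetical, and real teams would likely use richer metrics.

```python
def precision_at_k(ranked_doc_ids, relevant_doc_ids, k=3):
    """Fraction of the top k results that human raters judged relevant."""
    top_k = ranked_doc_ids[:k]
    if not top_k:
        return 0.0
    return sum(1 for doc_id in top_k if doc_id in relevant_doc_ids) / len(top_k)


def click_through_rate(impressions, clicks):
    """Clicks divided by impressions for a query."""
    return clicks / impressions if impressions else 0.0


def find_disagreements(results, judgments, click_logs,
                       min_precision=0.5, min_ctr=0.2):
    """Return queries where the human read and the click read disagree.

    The thresholds are arbitrary assumptions, chosen only for this example.
    """
    flagged = []
    for query, ranked in results.items():
        human_ok = precision_at_k(ranked, judgments[query]) >= min_precision
        impressions, clicks = click_logs[query]
        clicks_ok = click_through_rate(impressions, clicks) >= min_ctr
        if human_ok != clicks_ok:
            flagged.append(query)
    return flagged


# Hypothetical data from two separate evaluation teams.
results = {
    "neverending story": ["d1", "d2", "d3"],
    "bicycle parts": ["d7", "d8", "d9"],
}
judgments = {
    "neverending story": {"d1", "d2"},
    "bicycle parts": {"d7", "d8", "d9"},
}
click_logs = {  # query -> (impressions, clicks)
    "neverending story": (1000, 600),
    "bicycle parts": (800, 40),
}

print(find_disagreements(results, judgments, click_logs))
```

In this made-up data, raters like the “bicycle parts” results but users barely click them. A disagreement like that is exactly the kind of thing a single evaluation methodology would miss, and it may point back to a UI problem rather than a ranking one.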

If you’d like to talk about AI Powered Search with me and Trey Grainger of Lucidworks, come and join our Ask Me Anything session on 8th June as part of the combined Berlin Buzzwords/Haystack/MICES conference week — tickets are on sale now.