Summary

Search relevance expert Doug Turnbull revisits the concept of search relevance, exploring why people disagree on what makes results relevant. Using “Rocky” vs “Rocky Horror Picture Show” as an example, he demonstrates how subjective relevance judgments can be. Doug argues that relevance isn’t just about matching queries to documents, but about helping users progress through their search journey, from passive browsing to committed action. Users often start with incomplete information needs and learn what they want through searching. True relevance means presenting contrasting options that help users make decisions and move forward in their process, whether buying, applying, or researching.

Introduction

Five years ago, I wrote an article called “What is Search Relevance?” Back then, I had to shout to convince people to even notice whether search results were accurate. On OSC’s app development projects, I often raised my hand urgently, trying to get anyone to take seriously the thought: “Look, these search results clearly have nothing to do with this query!!”

Now I feel the awareness battle has been won. After many more years of experience, I want to take a more thoughtful look than I did in my previous article. Let’s dig into what we mean. What is relevance, really? What do we mean when we say this or that result is relevant or irrelevant?

Two People Walk Into a Search Bar

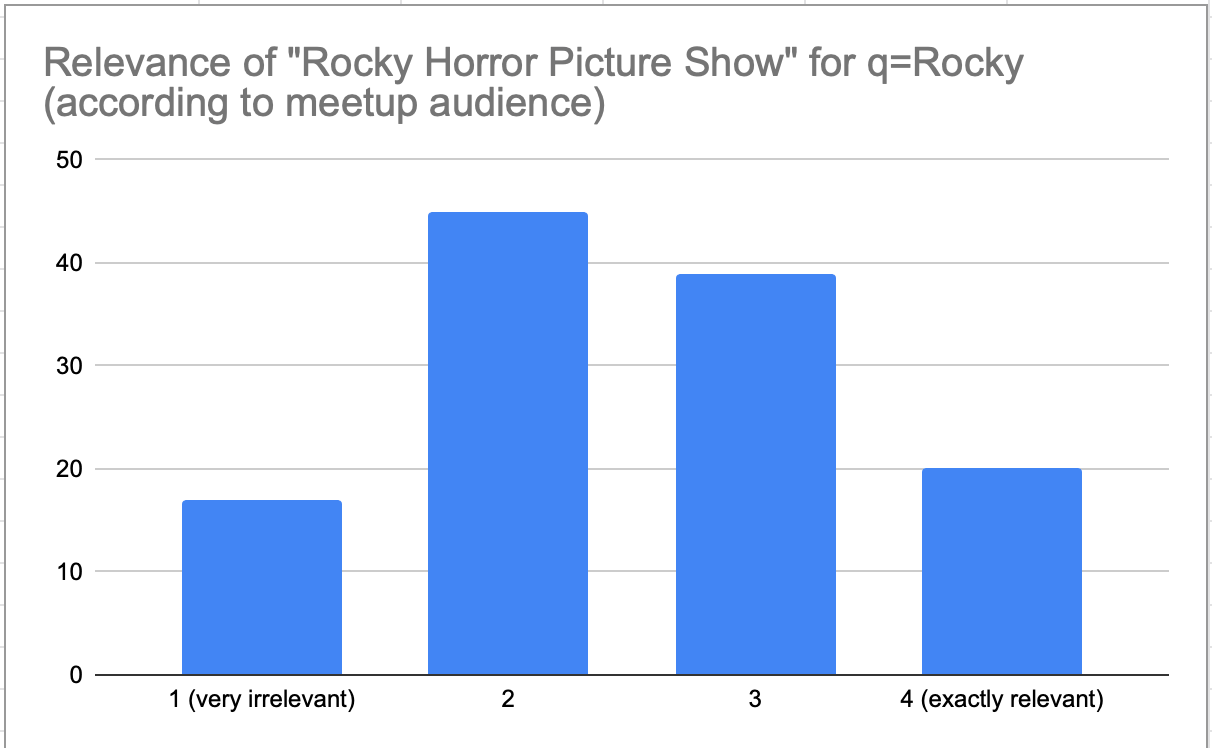

It’s amazing how two people can take a simple query, say “Rocky” in a movie search, look at a document, say “Rocky Horror Picture Show” and argue over the movie’s relevance or irrelevance. Recently, I asked a Meetup audience of about 50 folks to rate the relevance of “Rocky Horror Picture Show” was for a “rocky” from 1-4. I got surprisingly varying results, as shown in the graph below:

If you dig into why this is so, you get various explanations and justifications:

- Rocky is mentioned in the title; perhaps the user hasn’t finished typing their query yet? Both begin with “rocky”…

- Sure, it’s not as relevant as the “Rocky” movies, but it’s still one of the few movies that mention “Rocky” in the title, so it’s at least a “2 or 3”!

- The user clearly wants the movie “Rocky”; there’s little ambiguity, so show “Rocky” movies. Rocky Horror is clearly irrelevant.

- Anecdotally, you get different perceptions for “Rocky” (capital R) vs “rocky” (lowercase r), with the capitalization of the former seeming to signal a proper noun.

To me (the person who formulated the query), “Rocky Horror Picture Show” was clearly not relevant. I knew that I meant the Rocky movies. So I was frankly surprised to see such a wide distribution on what I perceived to be an “obvious” query.

What I did was ask the audience for a judgment on the query and document: a grade assigned to the query-document pair indicating how relevant the document is for that query. If we accumulate many grades for many query-document pairs, we get a judgment list, such as grading:

- “Rocky III” for q=Rocky as a 4 (very relevant)

- “Happy Gilmore” for q=Rocky as a 1 (very irrelevant)

- “Run Lola Run” for q=movies in Berlin as a 3 (moderately relevant)

And so on. As many readers may know, with enough of this data you can score the overall relevance of a search solution’s results with metrics like nDCG and ERR.
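As a rough illustration of how a judgment list feeds these metrics, here is a minimal Python sketch of nDCG and ERR. It assumes the 1-4 grades are shifted to a 0-3 relevance scale, that unjudged documents count as irrelevant, the common exponential-gain form of DCG, and the cascade-style formulation of ERR. The `JUDGMENTS` entries beyond those listed above (including a grade of 2 for “Rocky Horror Picture Show”) and all function names are hypothetical.

```python
import math

# Hypothetical judgment list: (query, document) -> grade on the 1-4 scale
JUDGMENTS = {
    ("rocky", "Rocky"): 4,
    ("rocky", "Rocky III"): 4,
    ("rocky", "Rocky Horror Picture Show"): 2,
    ("rocky", "Happy Gilmore"): 1,
}
MAX_GRADE = 4


def relevance(query, doc):
    """Shift a 1-4 grade to 0-3; unjudged docs are assumed irrelevant (0)."""
    return JUDGMENTS.get((query, doc), 1) - 1


def dcg(rels):
    """Discounted cumulative gain with exponential gain 2^rel - 1."""
    return sum((2 ** rel - 1) / math.log2(rank + 1)
               for rank, rel in enumerate(rels, start=1))


def ndcg(query, ranking, k=10):
    """DCG of the ranking divided by DCG of the best possible ordering."""
    rels = [relevance(query, doc) for doc in ranking[:k]]
    # Ideal ordering: every judged doc for this query, highest grade first
    ideal = sorted((g - 1 for (q, _), g in JUDGMENTS.items() if q == query),
                   reverse=True)[:k]
    ideal_dcg = dcg(ideal)
    return dcg(rels) / ideal_dcg if ideal_dcg > 0 else 0.0


def err(query, ranking, k=10):
    """Expected reciprocal rank: the user scans down and stops once satisfied."""
    max_rel = MAX_GRADE - 1
    p_continue = 1.0  # probability the user is still scanning at this rank
    score = 0.0
    for rank, doc in enumerate(ranking[:k], start=1):
        # Probability this result satisfies the user
        r = (2 ** relevance(query, doc) - 1) / 2 ** max_rel
        score += p_continue * r / rank
        p_continue *= 1 - r
    return score


good = ["Rocky", "Rocky III", "Rocky Horror Picture Show"]
bad = ["Rocky Horror Picture Show", "Happy Gilmore", "Rocky"]
print(ndcg("rocky", good), ndcg("rocky", bad))
print(err("rocky", good), err("rocky", bad))
```

Exponential gain rewards a 4 much more than a 3; a linear gain (`rel` instead of `2 ** rel - 1`) is an equally common choice.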

Best Method for Judging Search Result Relevance?

Judgments gathered explicitly from raters are known as expert judgments. For a given query, we might sit real live human beings down to review a search result in full and ask them, “how relevant is this?” After fully reviewing it, each expert rater assigns a grade (such as the 1-4 scale above) to the item for the query.
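Because raters disagree, as the Meetup audience did, teams often collect several grades per query-document pair and aggregate them. The sketch below is one simple approach under stated assumptions: average the grades, round to the 1-4 scale, and flag pairs whose population standard deviation reaches a threshold as contested. The ratings are made up for illustration (they are not the Meetup results), and `aggregate` and the threshold of 1.0 are hypothetical choices.

```python
from statistics import mean, pstdev

# Made-up ratings: several raters grade each (query, document) pair from 1-4
RATINGS = {
    ("rocky", "Rocky III"): [4, 4, 4, 3, 4],
    ("rocky", "Rocky Horror Picture Show"): [1, 3, 2, 1, 4],
}


def aggregate(ratings, disagreement_threshold=1.0):
    """Collapse each pair's ratings into one grade and flag contested pairs."""
    judgments = {}
    for pair, grades in ratings.items():
        spread = pstdev(grades)  # population std dev as a rough disagreement signal
        judgments[pair] = {
            "grade": round(mean(grades)),
            "spread": spread,
            "contested": spread >= disagreement_threshold,
        }
    return judgments


for pair, judgment in aggregate(RATINGS).items():
    print(pair, judgment)
```

Whether to average, take the median, or keep the whole distribution is a judgment call; contested pairs are often worth a closer look rather than a single number.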

As you might guess, collecting expert judgments is time-consuming to do well. Many search applications instead translate user behavior on the site (clicking on search results, watching movies, purchasing products, etc.) into an implicit grade for a document for that query. We’ll call these implicit judgments. If users seem to watch “Rocky Horror Picture Show” a lot after entering the query “rocky,” we may decide to give it a grade of 3. This implicit grade rests on our own assumptions about how users behave when they feel an item is relevant or irrelevant.
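Here is a minimal sketch of turning logged behavior into implicit grades, assuming we log how often a movie was shown for a query and how often it was watched afterward. The `QueryDocStats` record, the minimum-impression cutoff, and the rate thresholds are all hypothetical; choosing them is exactly the kind of assumption about user behavior described above.

```python
from dataclasses import dataclass


@dataclass
class QueryDocStats:
    """Hypothetical behavior counts for one (query, movie) pair from search logs."""
    impressions: int  # times the movie appeared in results for the query
    watches: int      # times a user watched it after that search


def implicit_grade(stats, min_impressions=50):
    """Map a watch-through rate to a 1-4 grade; all thresholds are assumptions."""
    if stats.impressions < min_impressions:
        return None  # too little evidence to assign a grade
    rate = stats.watches / stats.impressions
    if rate >= 0.30:
        return 4
    if rate >= 0.15:
        return 3
    if rate >= 0.05:
        return 2
    return 1


# Hypothetical counts for "Rocky Horror Picture Show" under the query "rocky"
print(implicit_grade(QueryDocStats(impressions=400, watches=80)))
```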

All of this brings us back to the central question of this article: what is a relevant result? Which item should be returned at the top? Should we trust the well-thought-out opinions of live human beings, carefully considering whether an item is relevant? Or should we trust our imperfect measures of real users’ behavior?

As you might suspect, both have their flaws.

This panel of experts (sometimes not even representative of users) is not really being forced to solve real problems. Even if they think carefully and are recruited from the user population, can they really put themselves in a live user’s shoes, with all their subtle considerations and the problems they are trying to use the application to solve? Sitting in front of the query “Notre Dame” isn’t the same as being a stressed-out high school student researching the latest fire for homework, frantically searching for good citations for a paper. That student may need a quick recap, not a detailed writeup: a subtlety we might miss if we don’t look at real user behavior.

Implicit judgments are themselves imperfect models. It’s often assumed that a click signals relevance. Does it? Users often click to inspect an item further, without having decided the item is relevant. A conversion is another indication of relevance, but the deeper you go in the funnel, the less data you gather. Without a conversion, you must make even more complicated assumptions (Did they read the article? Did they stay on the page for a while?). Even more troublesome, users only interact with what’s presented to them, especially items presented higher in the search listing. Your search UI may only have interaction data for 5 results for a query; what if the relevant item is buried on page 2 and no one clicks on it?
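To illustrate one common mitigation for presentation bias (a swapped-in technique, not one this article prescribes), the sketch below divides a document’s clicks by the number of times users were likely to have examined it, using assumed per-rank examination probabilities. The `EXAMINE_PROB` and `DEFAULT_PROB` values are hypothetical; in practice they are estimated, for example through result-randomization experiments or click models. Note that a document never shown, like one buried on page 2, still yields no estimate at all.

```python
# Hypothetical probability that a user examines a result at each rank.
# Real values depend on the UI and must be estimated from data.
EXAMINE_PROB = {1: 1.0, 2: 0.6, 3: 0.4, 4: 0.3, 5: 0.2}
DEFAULT_PROB = 0.05  # assumed examination probability for ranks below 5


def debiased_ctr(impressions):
    """
    impressions: list of (rank, clicked) tuples for one (query, doc) pair.
    Returns clicks per expected examination, or None if the doc was never shown.
    """
    if not impressions:
        return None  # no exposure, so no evidence either way
    expected_examinations = sum(EXAMINE_PROB.get(rank, DEFAULT_PROB)
                                for rank, _ in impressions)
    clicks = sum(1 for _, clicked in impressions if clicked)
    return clicks / expected_examinations


# Doc A: shown 10 times at rank 1, clicked 3 times (raw CTR 0.3)
doc_a = [(1, i < 3) for i in range(10)]
# Doc B: shown 10 times at rank 5, clicked once (raw CTR 0.1)
doc_b = [(5, i < 1) for i in range(10)]
print(debiased_ctr(doc_a), debiased_ctr(doc_b))
```

Raw CTR favors A, but once we account for how rarely rank 5 is examined, B draws more clicks per examination. Such corrections are only as good as the examination estimates, and a click is still not a relevance judgment.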

Of course there are a lot of methods to mitigate these issues, and every search team has its bag of tricks. I’d recommend Max Irwin’s Haystack Keynote if you’re curious about these tricks. But I want to dive deeper into what this notion of ‘relevance’ really means.

What is the “thing” we are chasing in these measures?

Information Needs are Almost Always Incomplete

In search, you might hear about this notion of an information need. The idea is that a user comes to search with a conscious and unconscious specification for what they want. The queries they enter are an imperfect formulation of that information need.

To get at what users really want in their heart of hearts, here’s a list of classes of information needs:

- Informational: Some users need factual answers to their questions (such as in question answering, “When was the Battle of Waterloo?”)

- Compare / Contrast: Some users need to compare / contrast options (such as purchasing shoes or comparing jobs to apply to)

- Research: Some users intently gather a set of items to deepen their understanding of, or solve, a complex topic, question, or problem (gathering articles to write a school report on a topic)

- Browsing: Some users simply want to passively browse or window shop (what strategy games are on the video game store Steam today)

- Known-Item: Some users want to look up a specific item (such as by name or SKU, or a name in your phone’s contact list)

If you reflect on these use cases, users aren’t always conscious of exactly what they want. Users gain awareness of what they want as they search. You start by researching the US Civil War, unsure what you’ll write your research paper about. You then see results, become more aware of what you want, and narrow your focus to a specific aspect of a particular battle close to your interests or the problem you need to solve. Reflect also on shopping: often you start with an imperfect need, like a laptop bag, only to realize as you search that what you really want is a “rugged, large laptop backpack suitable for overnights.”

It’s not just that users aren’t conscious of what they want; they learn as they go through the search process, refining queries, seeing what comes back, and being enticed by options.

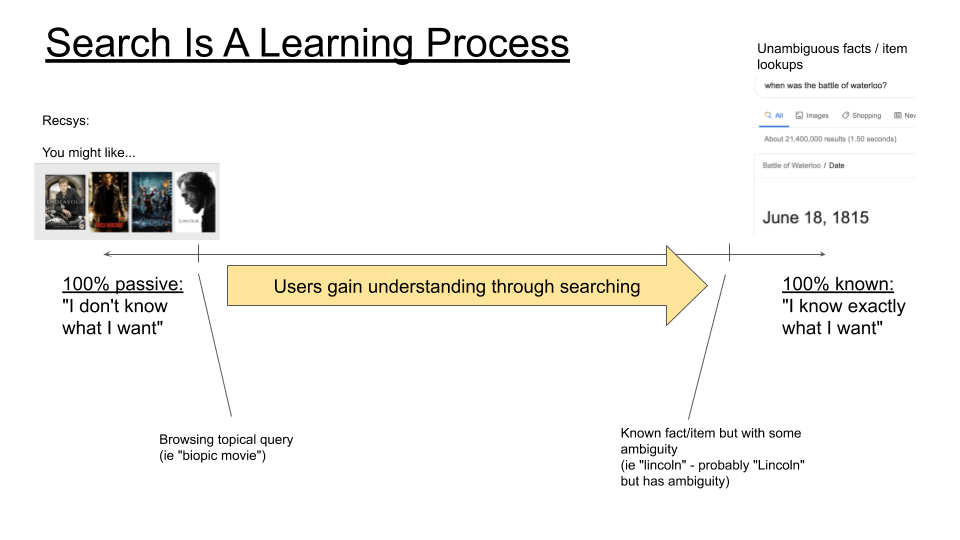

Search users are quite often “in process.” If users knew exactly what they wanted, this would often be just a database lookup by an id, or turn into a pure question answering problem. Nor are they completely passive, which would fall into the realm of recommendation systems. They are on a spectrum that is neither 100% passive nor 100% knowing exactly what they want, as illustrated on the diagram below:

Relevance is about forwarding the process

In reality, this user’s “process” may last months. An ongoing health issue could involve extensive searches over a long period of time. An expensive product search requires a lot of decision-making and research. For this reason, I prefer to focus on what forwards this process or journey they are on.

In the diagram above, I show a spectrum of search use cases, depending on how aware & committed users are to what they want. Users all the way to the right are close to knowing exactly what they want. Users all the way to the left are browsing / exploring some curiosity of theirs, barely a step away from a recommendation system problem.

In search, we help users along this process. We hope passive browsers might be delighted by something they see, and want to take further steps in a new direction, getting closer to taking action, answering their question, or solving their problem. More concretely, they start with an imperfect notion, and gradually get closer to knowing the exact question they want to ask, item they want to buy, kind of job they want to apply for, or articles they want to gather.

Relevance is about deciding whether or not to verb the item

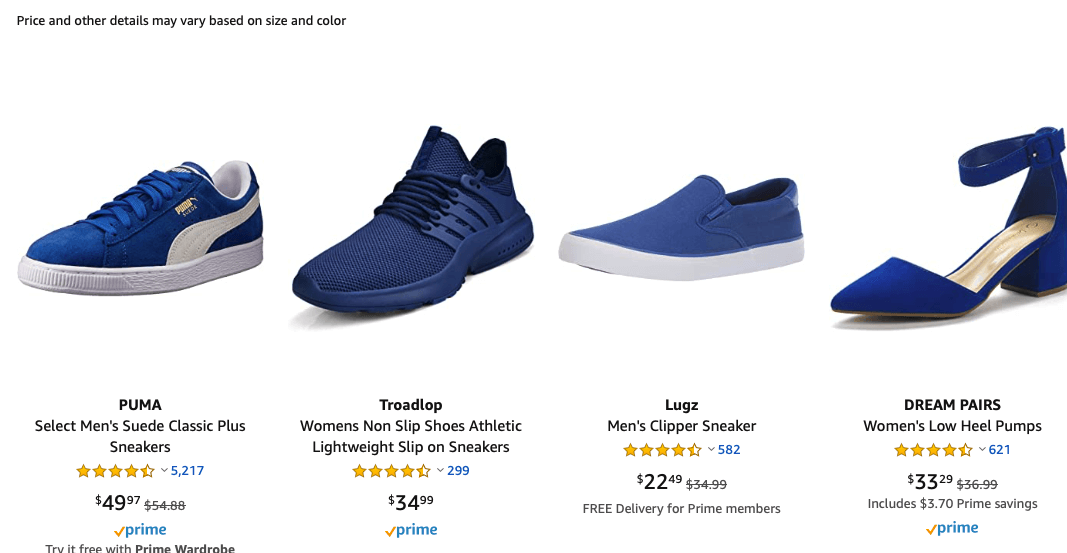

In many contexts, what’s relevant, is also about what users are going to ‘do’ to the item. Many search systems are built with an explicit action in mind. E-Commerce is about buying things, Job search applying, and so on. Similar to the object of a sentence, a search query could also be restated as “I want to buy __” or “I want to apply for ___ job”, where the blank is filled in by a search query. The blank might be “blue shoes” or “software engineer” respectively. Knowing whether I want to “buy” the item, means my decision on relevance, is going to be dictated by factors that lead me to making a good buying decision. Amazon, for example, gives us a lot of information to help us decide whether to buy an item, as shown in the screenshot below showing search results for “blue shoes”:

Not all search systems have a very clear action. But for those that do, this verb is clearly part of the relevance conversation. Search users on the right half of the process above are more committed. They have a clear sense of what they want to do. Even if they are not fully conscious of everything they want, they are on a mission. They will ultimately take a final action, or be dissatisfied, unable to find an item they want to (verb).

However, less committed users might not have a clear action they want to take, or one we could readily measure. Even on more transactional systems like e-commerce or job search, users may start out browsing. For these browsing users, on the far left side of the spectrum above, the “verb” might change to something a bit less committed, something like “I want to bookmark __ jobs”.

A word of warning to search teams that simply want to optimize conversions: supporting the non-committal side of the spectrum is really important. If your search system becomes a resource for more passive browsers, it could also become their system for purchasing products once they learn what they want. Amazon is both a resource for passive product research and a place to buy products. This is one reason that, even while standing in a Best Buy, you’ll look at Amazon: not just to buy, but for the depth of product information and reviews.

It’s not just relevance, it’s about contrasting options that forward decisions

If most relevance is about being in the middle of a process, moving through the process is about making decisions and evaluating options. This too dictates what we consider ‘relevant’. Different options that contrast with each other help users make decisions on next steps, move through the process, and get closer to the exact thing they want.

For example, passive/browsing job searchers would want to know their options at a very high level. They want to see that, for software engineer jobs in their area, the major themes are .Net and Python jobs, and that most jobs are located downtown. Answering these questions might involve not just search, but other analytics/discovery elements that help users understand the lay of the land and decide whether to take further steps.
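In a search engine, this kind of overview usually comes from facets or aggregations computed over the matching documents. As a sketch of the idea only, the snippet below counts attribute values across a hypothetical set of matching software engineer jobs in plain Python; the `JOBS` data and the `facet_counts` helper are invented for illustration.

```python
from collections import Counter

# Hypothetical job postings matching "software engineer" in the user's area
JOBS = [
    {"title": "Backend Engineer", "stack": ".Net", "location": "downtown"},
    {"title": "Platform Engineer", "stack": "Python", "location": "downtown"},
    {"title": "Data Engineer", "stack": "Python", "location": "suburbs"},
    {"title": "Web Developer", "stack": ".Net", "location": "downtown"},
    {"title": "Embedded Engineer", "stack": "C", "location": "suburbs"},
]


def facet_counts(docs, fields):
    """Count how many matching docs carry each value of each field."""
    return {field: Counter(doc[field] for doc in docs) for field in fields}


overview = facet_counts(JOBS, ["stack", "location"])
for field, counts in overview.items():
    print(field, counts.most_common())
```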

When a job seeker becomes more committed to a type of job, they still want to contrast different details. They know they want a “Java programming” job. They see one job with no commute, and another that seems to pay more but requires a long drive. Both are relevant. However, to a user making a decision, highlighting their key differences is more useful than highlighting their similarities.

In these situations, and in other search systems for research, e-commerce, and much more, we present users with contrasting options to choose from that ought to be relevant, yet different in important ways. The search UI’s job becomes doing its best to highlight these differences. A search system that shows five identical downtown Java programming jobs won’t help you feel like you made a good decision.
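One well-known way to trade relevance against redundancy is Maximal Marginal Relevance (MMR), named here as a swapped-in technique rather than something this article prescribes. This sketch greedily picks the result with the best mix of relevance and dissimilarity to the results already chosen. The job data, relevance scores, attribute sets, Jaccard similarity, and `lam=0.7` are all hypothetical; real systems typically use embedding or field-based similarity.

```python
def jaccard(a, b):
    """Similarity of two attribute sets: 1.0 means identical, 0.0 disjoint."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def mmr(candidates, relevance, features, k=3, lam=0.7):
    """
    Greedy Maximal Marginal Relevance re-ranking.
    lam=1.0 ranks purely by relevance; lower values reward novelty more.
    """
    selected = []
    pool = list(candidates)

    def marginal(doc):
        # Penalize a candidate by its similarity to the closest already-chosen result
        redundancy = max((jaccard(features[doc], features[s]) for s in selected),
                         default=0.0)
        return lam * relevance[doc] - (1 - lam) * redundancy

    while pool and len(selected) < k:
        best = max(pool, key=marginal)
        selected.append(best)
        pool.remove(best)
    return selected


# Hypothetical "Java programming" results and their attributes
FEATURES = {
    "job1": {"java", "downtown", "salary:mid"},
    "job2": {"java", "downtown", "salary:mid"},
    "job3": {"java", "downtown", "salary:mid"},
    "job4": {"java", "remote", "salary:mid"},
    "job5": {"java", "suburbs", "salary:high"},
}
RELEVANCE = {"job1": 0.95, "job2": 0.94, "job3": 0.93, "job4": 0.90, "job5": 0.88}

print(mmr(RELEVANCE, RELEVANCE, FEATURES, lam=1.0))
print(mmr(RELEVANCE, RELEVANCE, FEATURES, lam=0.7))
```

With these made-up scores, pure relevance (`lam=1.0`) returns three identical downtown jobs, while `lam=0.7` returns `job1`, `job5`, and `job4`: a downtown job, a higher-paying suburban one, and a remote one.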

We won’t feel these results are relevant if they’re not useful as a whole. They won’t feel relevant if they don’t differ sufficiently from each other to give a good overview of all the options scoped within the explicit keywords provided. If search is about the user’s next step in the process, and I can’t understand the most relevant next steps I might take, then search has failed at its goal.

This is where classic relevance breaks down, and where it’s good to look at other systems of evaluation: not just the relevance of individual results, but the performance of the search results page itself in helping users make additional decisions. This gives a broader point of view, helping to answer:

- Do the results display in a way that emphasizes their qualities relevant to the user (i.e., perceived relevance)?

- Do I get a good overview of the different options / paths to follow scoped to this keyword?

It’s not just about putting a search results page of “4s” in front of the user; it’s about the best “4s”, the ones that provide good forks in the road. Such a “fork” might mean new topics to explore, product options to consider, or additional factors when buying a house or applying for a job.
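The second question above can be approximated with a diversity metric such as subtopic recall: the fraction of a query’s known aspects covered by the top-k results (variants like α-nDCG also weigh relevance and rank). This is a minimal sketch assuming someone has already labeled the aspects of the query and of each document; the data and the `subtopic_recall` name are hypothetical.

```python
def subtopic_recall(ranking, doc_aspects, query_aspects, k=3):
    """Fraction of the query's aspects that appear somewhere in the top-k results."""
    if not query_aspects:
        return 0.0
    covered = set()
    for doc in ranking[:k]:
        covered |= doc_aspects.get(doc, set())
    return len(covered & query_aspects) / len(query_aspects)


# Hypothetical aspects a user weighing "Java programming" jobs might care about
QUERY_ASPECTS = {"downtown", "remote", "suburbs", "salary:mid", "salary:high"}
DOC_ASPECTS = {
    "job1": {"downtown", "salary:mid"},
    "job2": {"downtown", "salary:mid"},
    "job3": {"downtown", "salary:mid"},
    "job4": {"remote", "salary:mid"},
    "job5": {"suburbs", "salary:high"},
}

same_page = ["job1", "job2", "job3"]
diverse_page = ["job1", "job5", "job4"]
print(subtopic_recall(same_page, DOC_ASPECTS, QUERY_ASPECTS))
print(subtopic_recall(diverse_page, DOC_ASPECTS, QUERY_ASPECTS))
```

Both pages could consist entirely of “4s”, yet only the second offers forks in the road. A metric like this would sit alongside a per-result metric such as nDCG rather than replace it.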

So finally what are ‘relevant’ search results?

I think when you put this all together, you can get a picture of what a ‘relevant’ result is to me, at this stage in my career, after fighting too many relevance battles :). To me, returning a relevant result is increasingly only half the battle. It’s just as much about a relevant page that serves the user, helping them learn what they don’t know about the options: options they may ultimately want to (verb), whether buy, apply for, etc. It’s about helping them with their own evolving definition of “relevance.”

There will be keywords that need more of a browsing treatment in measurement and optimization. Often these are head queries like “shirt” on an apparel site. What’s “relevant” might be a broad range of options in this category, almost a curated page just for this topic. When users have a better idea of what they want, such as “blue dress shirt”, the pool of relevant possibilities becomes smaller, but includes the information needed to make a decision specific to that area (‘dress shirt’). A useful search results page knows those options and contrasts the relevant items appropriately.

Probably the most important thing is to study and map your users’ “journey” through the search process in your domain, from less committed browsers to known-item searchers. Every search application will have different classes of information needs on this spectrum that need their own systems of measurement and solutions. They’ll each have a way of demonstrating to the user the ‘next step’ to take, whether a conversion or simply a further refinement and increased education about the options available on their topic.

Sorry, it’s not that easy! Users have many, increasingly complex demands of search. I’m sure in a few years, my thinking on this will evolve further.

If you’d like to help that evolution and show me what I missed, please do reach out and contact me.