Thanks to Flax and René Kriegler for partnering with us to plan a tremendous Haystack EU event in early October. It was gratifying to see the relevance community come together. I love seeing so many good friends and fellow practitioners working together on our mutual struggles in relevance, search, and discovery.

When we originally had the idea for Haystack last year, we wanted to show search teams the honest truth of search relevance work: it’s hard. There’s no silver bullet (not even AI and machine learning). On the flip side, it is “just work,” as a client of ours once put it. You put the work in, you get results. If you take shortcuts, well…

True Learning to Rank Stories

Nothing highlighted Haystack’s goal more than our two Learning to Rank (LTR) talks: one from Otto/Shopping24, the other from Agnes Van Belle of Textkernel. Both talks pulled back the veil on real machine learning efforts at two very sophisticated search teams. Even after several iterations, both efforts initially hurt search quality more than they helped in A/B tests. Progress came over time, but it took a good deal of work to get there.

Why?

Both talks pointed to a fundamental product challenge underlying both LTR and hand-tuned search relevance: actually measuring what ‘good search’ is for your domain or product. For many, this means a ‘judgment list’ that grades how good documents are for a query. “Rambo” is clearly an A+ search result when a user searches for “Rambo”. But beyond simple examples, building good judgment lists that work at scale, whether generated by humans or from analytics, requires strategic thought and planning from many parts of the business. There’s a lot of “it depends” and many domain-specific gotchas. The takeaway for me was: be wary of ‘one size fits all’ approaches to this problem.

For both teams, the gotcha of “position bias” came up for click-based judgments in different ways. The idea is the same one I write about here: top results tend to get clicked more than results farther down the result list. This means “self-learning” search can end up learning bad patterns if your non-machine-learning relevance is garbage. Even when you account for position bias, you might still not have enough training data if too few users actually see a good result for a query. Garbage in, garbage out.
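To make position bias concrete, here is a minimal Python sketch of inverse propensity weighting, one common way to correct click-based judgments. It is not the method either team presented. The examination curve (`1 / rank**eta`), the impression-log format, `min_impressions`, and all function names are hypothetical assumptions chosen for illustration.

```python
from collections import defaultdict


def examination_propensity(rank: int, eta: float = 1.0) -> float:
    """Assumed probability that a user examines a result at `rank` (1-based).

    The 1 / rank**eta curve is a hypothetical stand-in; in practice the
    curve is estimated from your own data, e.g. by randomizing results.
    """
    return 1.0 / (rank ** eta)


def debiased_click_grades(log, eta=1.0, min_impressions=10):
    """Turn (query, doc_id, rank, clicked) impressions into per-pair grades.

    Each click is weighted by 1 / propensity (inverse propensity weighting),
    so a click at a rarely examined rank counts for more than one at rank 1.
    """
    weighted_clicks = defaultdict(float)
    impressions = defaultdict(int)
    for query, doc_id, rank, clicked in log:
        key = (query, doc_id)
        impressions[key] += 1
        if clicked:
            weighted_clicks[key] += 1.0 / examination_propensity(rank, eta)

    grades = {}
    for key, shown in impressions.items():
        if shown < min_impressions:
            # Too few impressions to trust: skip rather than guess.
            continue
        grades[key] = weighted_clicks[key] / shown
    return grades


# Hypothetical log: doc "a" always shown at rank 1, doc "b" at rank 4,
# doc "c" shown only three times.
log = (
    [("rambo", "a", 1, i < 5) for i in range(10)]
    + [("rambo", "b", 4, i < 2) for i in range(10)]
    + [("rambo", "c", 2, True) for _ in range(3)]
)
grades = debiased_click_grades(log)
```

Here "b" earns a higher grade (0.8) than "a" (0.5) despite getting fewer raw clicks, and "c" gets no grade at all because too few users saw it.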

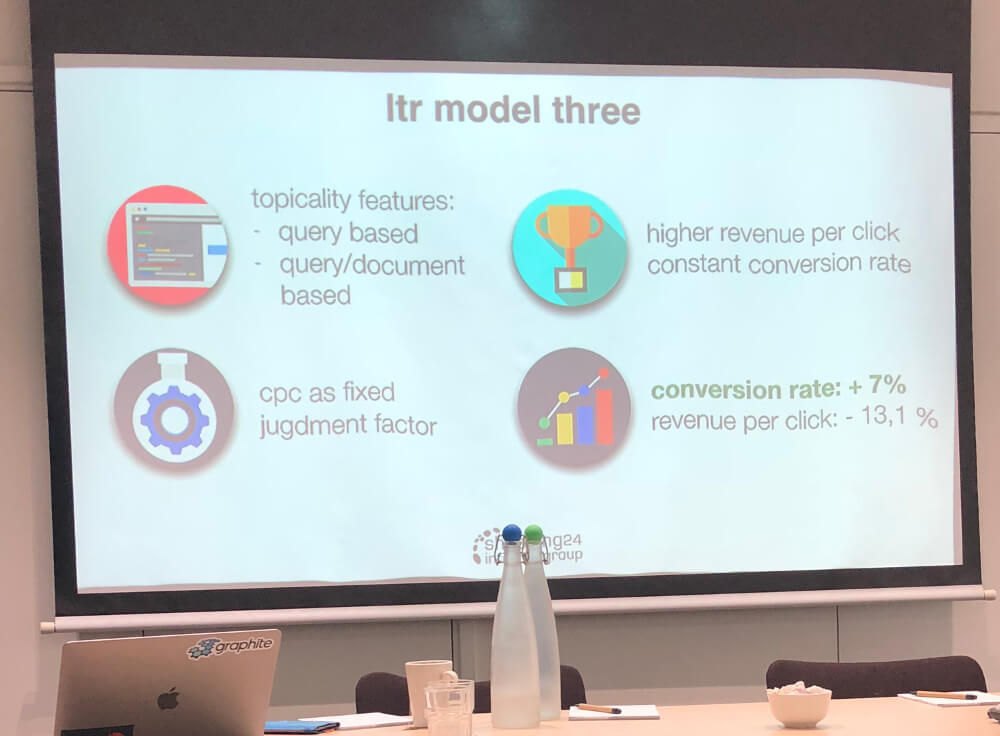

Shopping24’s talk highlights that the long, winding reality of applying Learning to Rank to search depends a lot on measuring what matters to your business (in this case CPC vs Conversion).

Another issue was including more than just user relevance in the “grade.” For Shopping24, CPC is another factor that must be included to support their e-commerce business and merchants. René Kriegler started with a blog post I wrote on modifying the LambdaMART training algorithm to optimize user-product marketplaces with Learning to Rank, then took it a step further based on an approach mentioned in the original LambdaMART paper for combining two rankers. Torsten’s team also built tooling to allow floating-point grades, forking RankyMcRankFace to make FloatyMcFloatFace. This lets a grade be nudged up or down in fine increments based on multiple considerations. Hopefully we get a PR for their great work!
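A small sketch shows why floating-point grades help: once a judgment blends several considerations, it rarely lands on an integer. The function below folds a relevance judgment and a normalized CPC into one float grade using a hypothetical weight `alpha`. This is an assumption for illustration only: it is not Shopping24’s formula, not the LambdaMART ranker-combination approach René described, and it makes no claim about FloatyMcFloatFace’s input format.

```python
def blended_grade(relevance: float, cpc: float, max_cpc: float,
                  alpha: float = 0.8, max_grade: float = 4.0) -> float:
    """Blend a relevance judgment with a business signal into one float grade.

    relevance: judgment on a 0..max_grade scale.
    cpc: cost-per-click for the item, normalized against max_cpc.
    alpha: hypothetical weight on relevance (1 - alpha goes to CPC).
    """
    if max_cpc <= 0:
        raise ValueError("max_cpc must be positive")
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    rel_norm = relevance / max_grade
    cpc_norm = min(max(cpc / max_cpc, 0.0), 1.0)  # clamp to [0, 1]
    return max_grade * (alpha * rel_norm + (1.0 - alpha) * cpc_norm)


# Made-up values: a perfectly relevant item with no CPC, a less relevant
# item with the catalog's highest CPC, and one in between.
top_relevance = blended_grade(relevance=4, cpc=0.0, max_cpc=1.5)
top_cpc = blended_grade(relevance=3, cpc=1.5, max_cpc=1.5)
in_between = blended_grade(relevance=3, cpc=0.3, max_cpc=1.5)
```

With `alpha=0.8`, the first two items tie at roughly 3.2 and the third lands at about 2.56, a grade an integer-only judgment list could not express.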

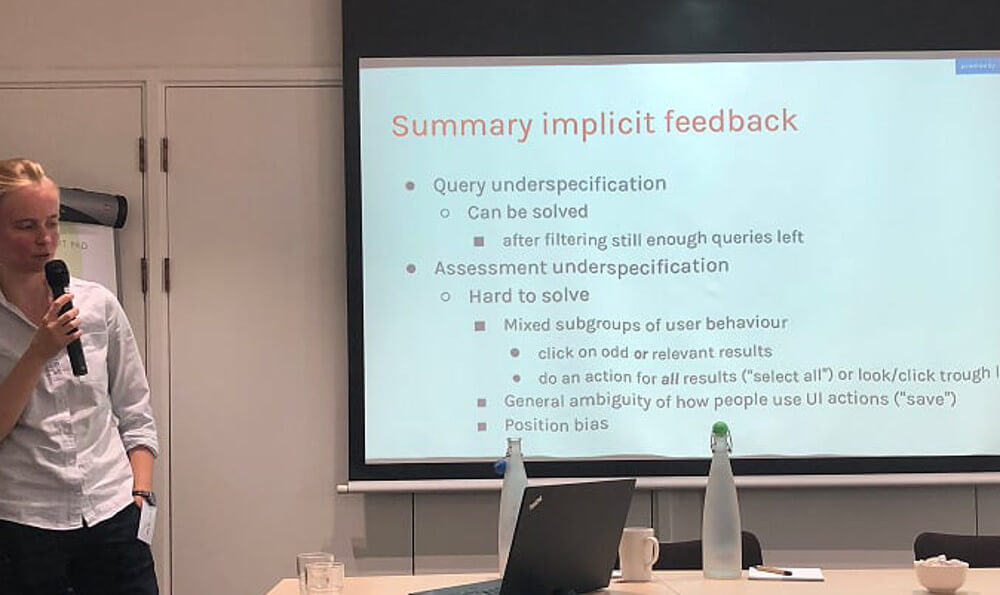

Agnes discusses ‘query underspecification’ – where queries don’t get enough judgments to generate meaningful LTR training/evaluation data

Another issue was combining explicit and implicit feedback from users into a judgment list. Agnes Van Belle talked about how Textkernel/CareerBuilder gave users opportunities to give explicit thumbs-up/thumbs-down feedback. She compared that explicit feedback with implicit feedback gathered via a click model. Both had pros and cons: explicit feedback was more accurate but underspecified (few users gave a thumbs up or thumbs down), while implicit feedback came in bulk but might not cover all the important use cases (see the slide above). Her talk reminded me of Elizabeth Haubert’s talk at Haystack US on combining analytics-based user feedback with explicit expert judgments. In Elizabeth’s talk, the goal was to get the best of both worlds: the bulk of analytics with the accuracy of thoughtful domain experts.
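One hypothetical way to blend the two signals is to trust explicit votes in proportion to how many there are, falling back to the implicit click-model grade when votes are scarce. The sketch below assumes both grades live in [0, 1] and uses a made-up smoothing constant `k`; it is not Textkernel’s or Elizabeth’s actual method.

```python
from typing import Optional


def combined_grade(thumbs_up: int, thumbs_down: int,
                   implicit: Optional[float], k: float = 5.0) -> Optional[float]:
    """Blend explicit votes with an implicit (click-model) grade in [0, 1].

    The weight on explicit feedback grows with the number of votes:
    w = votes / (votes + k). `k` is a hypothetical smoothing constant;
    with few votes the implicit grade dominates, with many the votes do.
    """
    votes = thumbs_up + thumbs_down
    explicit = thumbs_up / votes if votes else None
    if explicit is None and implicit is None:
        return None  # underspecified: no signal at all for this pair
    if explicit is None:
        return implicit
    if implicit is None:
        return explicit
    w = votes / (votes + k)
    return w * explicit + (1.0 - w) * implicit


# Five thumbs-up and a weak click signal: the two are weighted equally.
five_votes = combined_grade(5, 0, 0.2)
no_votes = combined_grade(0, 0, 0.3)
nothing = combined_grade(0, 0, None)
```

With `k=5`, five votes and the click signal carry equal weight (0.6 here); with no votes the click grade passes through unchanged, and with neither signal the pair stays ungraded.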

Testing, measurement, and experimentation: the silver bullets of search relevance

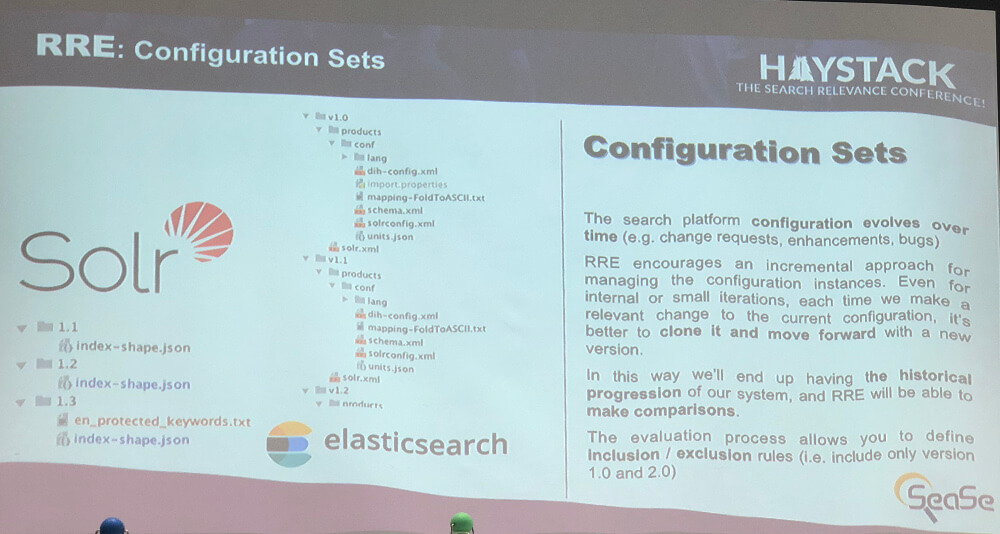

Two other talks showed tools and techniques for gathering judgment lists. Everyone finds the machine learning exciting, but as you may have heard, the fun “building a model” part is less than 5% of the work. For search, the hard work of wiring data together to get meaningful judgments is a wheel that companies reinvent over and over again. Alessandro Benedetti and Andrea Gazzarini of Sease showed how the community could rally around a single tool with their work on Rated Ranking Evaluator (RRE). RRE is a fully formed continuous-integration framework for search. Having worked on relevance testing tools ourselves, we found RRE a breath of fresh air. It’s clear RRE was created by two consultants with a lot of relevance experience who know what’s needed to test relevance. We’ll be using it on a couple of our projects and look forward to contributing back.

Rated Ranking Evaluator lets you test a complete search configuration against a database of judgments.
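The core idea behind testing a search configuration against judgments can be sketched in a few lines of Python: score each configuration’s ranking with NDCG@k against a judgment list, then fail the build if a change makes things worse. This is not RRE’s API or configuration format. The judgment data, document IDs, and function names are hypothetical, and unjudged documents are assumed to have grade 0.

```python
import math

# Hypothetical judgment list: query -> {doc_id: grade on a 0-4 scale}.
JUDGMENTS = {
    "rambo": {"rambo": 4, "first_blood": 3, "rambo_ii": 3, "rocky": 1},
}


def dcg(grades):
    """Discounted cumulative gain with exponential gain (2**g - 1)."""
    return sum((2 ** g - 1) / math.log2(i + 2) for i, g in enumerate(grades))


def ndcg_at_k(query, ranked_ids, judgments, k=10):
    """NDCG@k of one ranking; unjudged docs count as grade 0 (an assumption)."""
    judged = judgments.get(query, {})
    gains = [judged.get(doc_id, 0) for doc_id in ranked_ids[:k]]
    ideal = sorted(judged.values(), reverse=True)[:k]
    ideal_dcg = dcg(ideal)
    return dcg(gains) / ideal_dcg if ideal_dcg > 0 else 0.0


# Rankings returned by two hypothetical search configurations.
baseline = ["rocky", "rambo", "first_blood"]
candidate = ["rambo", "first_blood", "rambo_ii"]

baseline_score = ndcg_at_k("rambo", baseline, JUDGMENTS, k=3)
candidate_score = ndcg_at_k("rambo", candidate, JUDGMENTS, k=3)

# CI-style gate: refuse a configuration change that lowers relevance.
assert candidate_score >= baseline_score, "relevance regression"
```

A real suite would average the score over a sample of head and tail queries rather than a single one.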

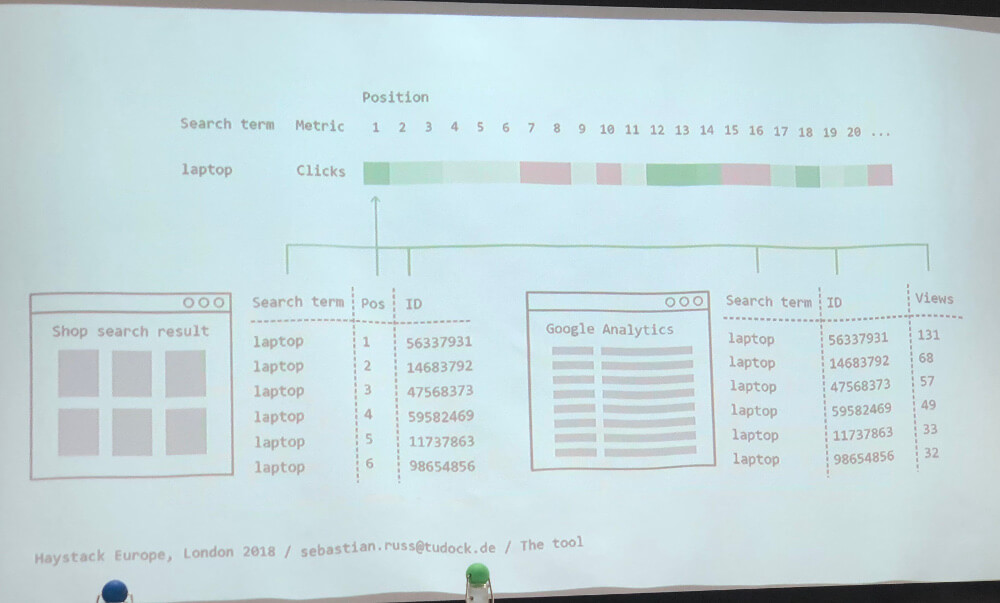

The other talk in this vein was by Tudock’s Sebastian Russ, on relevance visualizations. Communicating relevance to a non-technical audience can be tough. It seems rather abstract, and business users have a hard time understanding the pros and cons of different relevance approaches. They can also be prone to cherry-picking a pet query instead of looking at a relevance solution holistically. Sebastian showed off several visualizations he pioneered, using just Excel, to show these users the impact of different ranking strategies.

Sebastian Russ from Tudock constructs very accessible relevance visualizations from Google Analytics and a few simple tools!

These talks were complemented by Karen Renshaw’s broader perspective. Karen manages Grainger UK/Zoro’s search team, and she discussed her team’s methodical approach to working on relevance problems. She gave good specifics on how Zoro tests, sampling from head and tail queries, and admitted that at times it’s unavoidable to fix the “highest paid person’s” pet query. Karen showed us how working on search is a journey, never a destination, no matter the underlying technology. Most importantly, she stressed that relevance exists in a user experience ecosystem alongside the UI and other factors. All must be accounted for in the solution!

Karen Renshaw leads search at Zoro/Grainger Global Online, where she thinks a lot about the process of relevance at the intersection of the technical and the business.

Boolean Ninjas need our help too!

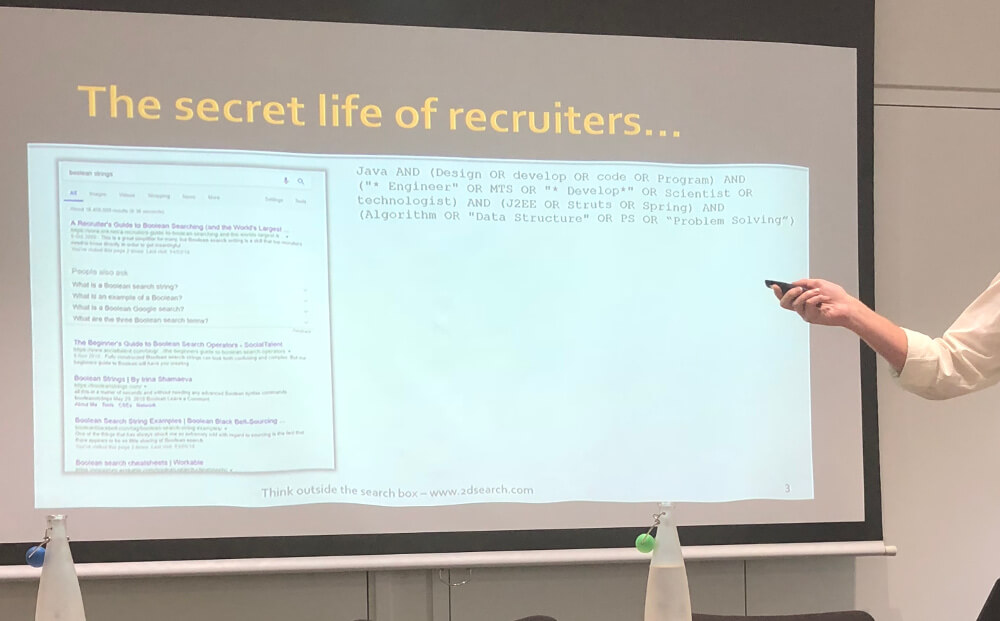

Tony Russell-Rose shows off advanced search syntax and tools for improving advanced searchers’ workflows.

Tony Russell-Rose walked through advanced search syntax use cases. So many industries rely on advanced search syntax: recruiters, paralegals, pharma researchers, and more. In recruiting, Tony pointed out that many of these searchers pride themselves on their skill in crafting queries, calling themselves “boolean ninjas”. Tony highlighted his tooling to help these searchers craft and execute these verbose query plans. Apparently, over 90% of the queries these users copy-paste into the search box have errors that can change the meaning of the query. Tony has built something of an IDE for advanced queries, called 2Dsearch, that suggests query terms, makes it easier to group query clauses, and detects errors. Tony’s talk was a nice reminder of a use case that, while not sexy, is very prevalent and one every relevance engineer needs to account for: the advanced searcher.

See you in 2019!

Keep your eyes peeled for more Haystack goodness in 2019! We hope to keep this community alive and meeting regularly to discuss the challenges in our field. Specifically: how can we continue to collaborate on tools, approaches, and open source software to support each other in our mutual challenges?